Researchers from Tsinghua University have developed ADELIE (Aligning large language moDELs on Information Extraction), a method for aligning large language models (LLMs) with information extraction (IE) tasks. Trained on a specialized dataset called IEInstruct, ADELIE is reported to improve LLM performance in both closed and open IE domains.

Bridging the Gap Between LLMs and Precise IE

Information extraction, a critical field in artificial intelligence, transforms unstructured text into structured, actionable data. While modern LLMs possess remarkable language understanding capabilities, they often struggle to execute the nuanced instructions required for accurate IE, particularly in closed IE tasks that demand strict adherence to predefined schemas.
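To make "strict adherence to predefined schemas" concrete, here is a minimal sketch of a check that a closed IE pipeline might run on model output. The schema, the relation labels, and the `violations` helper are hypothetical illustrations and are not taken from ADELIE.

```python
# Hypothetical closed-IE schema: the only relation labels the model may emit.
ALLOWED_RELATIONS = {"born_in", "works_for", "located_in"}


def violations(triples):
    """Return the triples whose relation label is outside the schema."""
    return [t for t in triples if t[1] not in ALLOWED_RELATIONS]


# Example model output as (head, relation, tail) triples.
predicted = [
    ("Marie Curie", "born_in", "Warsaw"),
    ("Marie Curie", "discovered", "polonium"),  # label not in the schema
]

print(violations(predicted))
```

An output that is fluent and factually plausible can still fail this kind of check, which is why closed IE is harder for instruction-following models than free-form answering.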

Traditionally, researchers have employed strategies like prompt engineering to assist LLMs in IE tasks without modifying underlying model parameters. Because prompting leaves the model itself unchanged, it can only go so far in improving how LLMs understand and execute structured tasks, which has motivated approaches that train the model directly.

ADELIE: A Novel Approach

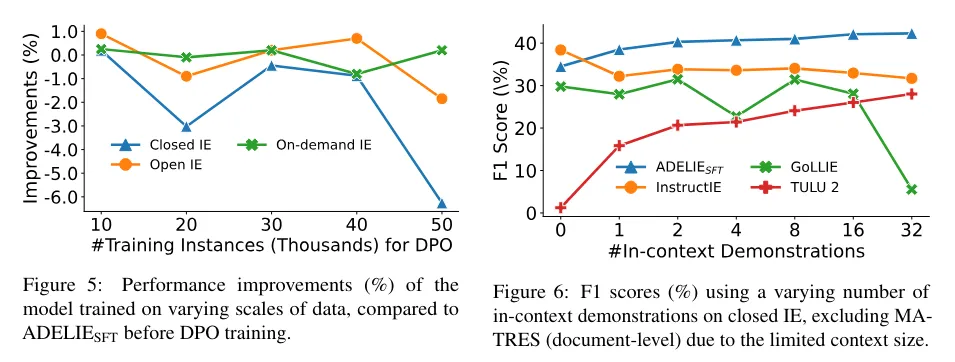

In response to this challenge, researchers from Tsinghua University introduced ADELIE, which combines supervised fine-tuning (SFT) with Direct Preference Optimization (DPO). The fine-tuning stage teaches the model to follow IE instructions, and the preference stage is intended to align its outputs more closely with what human users expect from an extraction.
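For reference, the standard DPO objective for a single preference pair can be sketched as follows. This is the generic loss from the DPO literature, not ADELIE's training code. The article does not say how the preference pairs are built or which `beta` is used, so the default value below is an assumed placeholder.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Standard DPO loss for one (chosen, rejected) pair of responses.

    Each argument is the summed log-probability of a full response under
    the policy being trained or under the frozen reference model.
    beta=0.1 is an assumed placeholder, not a value from the article.
    """
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(logits)), written in a numerically stable form.
    if logits >= 0:
        return math.log1p(math.exp(-logits))
    return -logits + math.log1p(math.exp(logits))
```

The loss falls as the policy assigns relatively more probability to the preferred extraction than the reference model does, and rises in the opposite case.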

ADELIE is trained on the IEInstruct dataset, which comprises over 83,000 instances across various IE output formats, including triplets, natural language responses, and JSON outputs. Initial training mixes IE-specific and generic data and fine-tunes the LLaMA 2 model for 6,306 gradient steps, so that the model retains broad linguistic capabilities alongside specialized IE performance.
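As a rough illustration of the three output formats mentioned above, the sketch below renders one extracted fact as a triplet, a natural language response, and a JSON object. The fact, the field names, and the exact templates are hypothetical and are not drawn from IEInstruct.

```python
import json

# One hypothetical extracted fact; the field names are illustrative only.
fact = {"head": "Tsinghua University", "relation": "located_in", "tail": "Beijing"}


def as_triplet(f):
    """Render the fact as a (head, relation, tail) triplet string."""
    return f"({f['head']}, {f['relation']}, {f['tail']})"


def as_sentence(f):
    """Render the fact as a natural language response."""
    return f"{f['head']} has the relation '{f['relation']}' with {f['tail']}."


def as_json(f):
    """Render the fact as a JSON object string."""
    return json.dumps(f)


for render in (as_triplet, as_sentence, as_json):
    print(render(fact))
```

Training on several renderings of the same underlying extraction is one plausible way to keep a model from overfitting to a single output template.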

Benchmark-Setting Results

According to the reported evaluations, the two ADELIE models, ADELIE-SFT and ADELIE-DPO, outperform state-of-the-art alternatives across multiple metrics and task types. In closed IE tasks, ADELIE-SFT shows an average F1 score improvement of 5% over standard LLM outputs. The advantages are also visible in open IE, where ADELIE models surpass competitors by margins of 3-4% in robustness and extraction accuracy.
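For readers unfamiliar with the metric, F1 is the harmonic mean of precision and recall over the extracted items. The sketch below assumes exact-match comparison of extracted tuples, which is a common convention but is an assumption here, since the article does not describe the matching criteria used in the evaluation.

```python
def f1_score(predicted, gold):
    """F1 over exact-match items (e.g. triples), compared as sets."""
    predicted, gold = set(predicted), set(gold)
    true_positives = len(predicted & gold)
    if true_positives == 0:
        return 0.0
    precision = true_positives / len(predicted)
    recall = true_positives / len(gold)
    return 2 * precision * recall / (precision + recall)


# Hypothetical example: one of two predictions matches one of two gold triples.
gold = [("Ada", "works_for", "Acme"), ("Acme", "located_in", "Paris")]
pred = [("Ada", "works_for", "Acme"), ("Ada", "born_in", "Paris")]
print(f1_score(pred, gold))
```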

The models also follow user instructions well in on-demand IE scenarios, where the user specifies what to extract, and produce accurately structured output. These results suggest that ADELIE can be useful for a wide range of applications, from academic research to real-world data processing.

Preserving General Capabilities

One of the key advantages of ADELIE is its ability to enhance LLMs’ IE performance without compromising their general language capabilities, which is often a concern with task-specific tuning. By carefully integrating IE-specific training with generic data, ADELIE ensures that the models maintain their broad linguistic proficiency while excelling in targeted IE tasks.
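The idea of blending IE-specific and generic training data can be sketched as below. The `mix_datasets` helper and its `general_fraction` parameter are hypothetical; the article does not report the actual mixing ratio or sampling procedure, so treat this only as an illustration of the general technique.

```python
import random


def mix_datasets(ie_examples, general_examples, general_fraction, seed=0):
    """Build a shuffled training list with a target share of general data.

    general_fraction is the desired share of general examples in the result.
    It is a hypothetical knob, not a value reported for ADELIE.
    """
    if not 0 <= general_fraction < 1:
        raise ValueError("general_fraction must be in [0, 1)")
    rng = random.Random(seed)
    # Number of general examples needed to reach the requested overall share.
    n_general = round(len(ie_examples) * general_fraction / (1 - general_fraction))
    n_general = min(n_general, len(general_examples))
    mixed = list(ie_examples) + rng.sample(list(general_examples), n_general)
    rng.shuffle(mixed)
    return mixed
```

For example, calling `mix_datasets(ie_data, general_data, 0.2)` keeps every IE example and adds enough general examples to make up roughly a fifth of the final training set.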

Conclusion

ADELIE’s training and optimization strategies show the value of aligning LLMs with information extraction tasks. Its emphasis on data diversity and instruction specificity narrows the gap between human expectations and machine performance in IE. As researchers continue to push the boundaries of language models, ADELIE offers a practical basis for more precise and effective information extraction.