Large language models (LLMs) have demonstrated remarkable capabilities across a wide range of applications. However, aligning these models with human preferences and values remains a critical challenge.

Over the past year, several promising optimization techniques have emerged to tackle this alignment problem:

- Direct Preference Optimization (DPO)

- Identity Preference Optimization (IPO)

- Kahneman-Tversky Optimization (KTO)

Let’s take a closer look at how each of these methods works and what the latest research reveals about their effectiveness.

What is Direct Preference Optimization?

Direct Preference Optimization (DPO) recasts the alignment problem as a simple loss function that can be directly optimized on a preference dataset. Unlike traditional reinforcement learning approaches, DPO eliminates the need for an explicit reward model.

This makes the training process more stable and computationally efficient. DPO has already been successfully applied to align models like Zephyr and Intel’s NeuralChat.

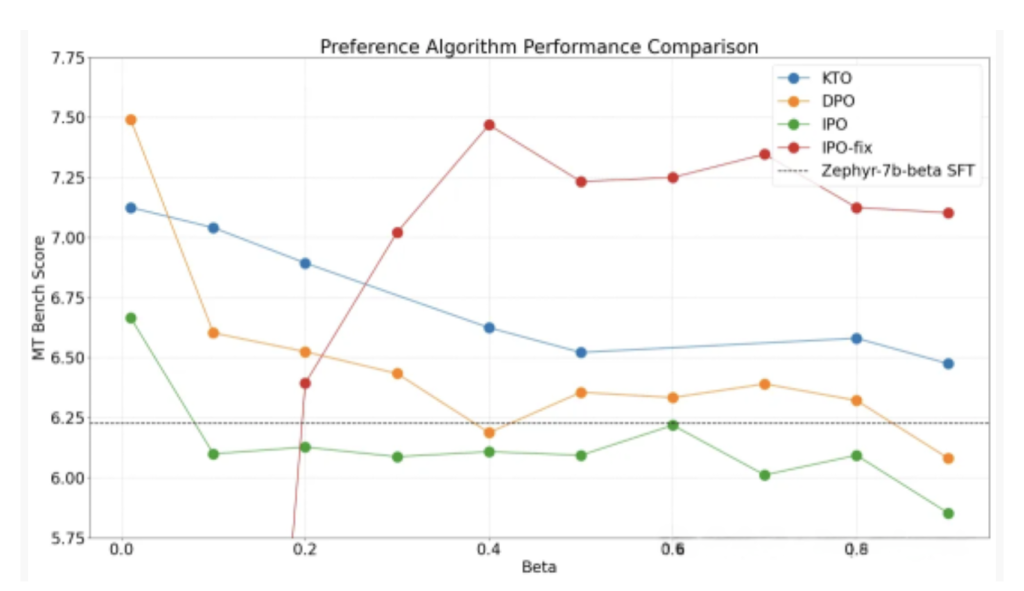

In experiments comparing DPO, IPO and KTO, DPO achieved the best performance in most settings. The key is tuning the β hyperparameter, which controls how strongly the trained model is kept close to the reference model.
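To make the role of β concrete, here is a minimal sketch of the DPO loss for a single preference pair, written in plain Python. It assumes you already have the summed log-probabilities of the chosen and rejected completions under both the trained policy and the frozen reference model; the function and argument names are illustrative and are not taken from TRL.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,
) -> float:
    """DPO loss for one (chosen, rejected) pair of completions."""
    # How much more likely each completion is under the policy than the reference.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    # The loss falls as the policy favors the chosen completion more than the reference does.
    margin = chosen_logratio - rejected_logratio
    return -log_sigmoid(beta * margin)


if __name__ == "__main__":
    # Hypothetical log-probabilities, for illustration only.
    print(dpo_loss(-9.0, -13.0, -10.0, -12.0, beta=0.1))
```

When the policy equals the reference, the margin is zero and the loss is log 2, whatever β is. β only scales how quickly the loss reacts once the policy starts to diverge from the reference.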

How Identity Preference Optimization Improves Robustness

A potential drawback of DPO is that it can quickly overfit on the preference dataset. To address this, Identity Preference Optimization (IPO) introduces a regularization term into the DPO loss function.

This helps maintain a balance between optimizing for preferences and generalizing beyond the training data. IPO allows the model to converge without relying on techniques like early stopping.

When the IPO loss is implemented correctly by averaging log-likelihoods of completions, IPO performs on par with DPO and better than KTO in the paired preference setting. However, the choice of regularization parameter is crucial to IPO’s success.
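The following is a rough sketch of the IPO loss for one preference pair, including the averaging of per-token log-likelihoods mentioned above. It assumes the convention, used in TRL as far as I know, of reusing β as the regularization strength, so the target margin is 1/(2β). The helper names and the per-token inputs are illustrative.

```python
from typing import Sequence


def average_logp(token_logps: Sequence[float]) -> float:
    """Average (not sum) the per-token log-probabilities of a completion."""
    return sum(token_logps) / len(token_logps)


def ipo_loss(
    policy_chosen: Sequence[float],
    policy_rejected: Sequence[float],
    ref_chosen: Sequence[float],
    ref_rejected: Sequence[float],
    beta: float = 0.1,
) -> float:
    """IPO loss for one (chosen, rejected) pair, given per-token log-probs."""
    chosen_logratio = average_logp(policy_chosen) - average_logp(ref_chosen)
    rejected_logratio = average_logp(policy_rejected) - average_logp(ref_rejected)
    margin = chosen_logratio - rejected_logratio
    # Squared distance to a fixed target: pushing the margin past the target is
    # penalized as well, which is what regularizes against overfitting.
    return (margin - 1.0 / (2.0 * beta)) ** 2


if __name__ == "__main__":
    # Hypothetical per-token log-probabilities, for illustration only.
    print(ipo_loss([-1.0, -1.0], [-2.0], [-1.5, -1.5], [-1.5], beta=0.5))
```

Unlike the DPO loss, which keeps decreasing as the margin grows, this loss has its minimum at a finite margin. That is why the choice of regularization parameter matters so much.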

Kahneman-Tversky Optimization: A Novel Approach

Kahneman-Tversky Optimization (KTO) takes a completely different approach by abandoning pairwise preference data altogether. Instead, it defines a loss function based solely on single examples labeled as “good” or “bad”, such as 👍 or 👎 reactions in a chat interface.

This leverages insights from prospect theory on how humans perceive random variables in a biased but predictable way. KTO only requires knowing if an output is desirable or undesirable for a given input, making it much easier to deploy in real-world scenarios where such binary feedback is abundant.

Surprisingly, the KTO authors report that KTO-aligned models match or exceed the performance of preference-based methods like DPO at scales from 1B to 30B parameters. The key advantage is KTO’s feasibility in practice: it needs only a binary label per output rather than paired comparisons.
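As a hedged illustration, the per-example KTO objective can be sketched as below, following my reading of the KTO paper. The reference point `z_ref` (a KL-based estimate in the paper) is simply passed in as a number here, and `lambda_d` and `lambda_u` are the weights for desirable and undesirable examples. All names are illustrative. This is the unpaired form, not the `kto_pair` variant used in the experiments later in this post.

```python
import math


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def kto_loss(
    policy_logp: float,
    ref_logp: float,
    desirable: bool,
    z_ref: float = 0.0,
    beta: float = 0.1,
    lambda_d: float = 1.0,
    lambda_u: float = 1.0,
) -> float:
    """KTO-style loss for a single completion labeled good or bad."""
    # Implied reward: log-ratio between the policy and the reference model.
    reward = policy_logp - ref_logp
    if desirable:
        # Good outputs: loss falls as the reward rises above the reference point.
        return lambda_d * (1.0 - sigmoid(beta * (reward - z_ref)))
    # Bad outputs: loss falls as the reward drops below the reference point.
    return lambda_u * (1.0 - sigmoid(beta * (z_ref - reward)))


if __name__ == "__main__":
    # Hypothetical log-probabilities, for illustration only.
    print(kto_loss(-8.0, -10.0, desirable=True))
    print(kto_loss(-8.0, -10.0, desirable=False))
```

Each example contributes on its own, so a dataset of thumbs-up and thumbs-down labels can be used directly without building pairs.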

Parameter Configuration

The alignment-handbook provides a simple way to configure individual experiments, with these parameters used to configure the run_dpo.py script:

```yaml
# Model arguments
model_name_or_path: teknium/OpenHermes-2.5-Mistral-7B
torch_dtype: null

# Data training arguments
dataset_mixer:
  HuggingFaceH4/orca_dpo_pairs: 1.0
dataset_splits:
- train_prefs
- test_prefs
preprocessing_num_workers: 12

# Training arguments with sensible defaults
bf16: true
beta: 0.01
loss_type: sigmoid
do_eval: true
do_train: true
evaluation_strategy: steps
eval_steps: 100
gradient_accumulation_steps: 2
gradient_checkpointing: true
gradient_checkpointing_kwargs:
  use_reentrant: False
hub_model_id: HuggingFaceH4/openhermes-2.5-mistral-7b-dpo
hub_model_revision: v1.0
learning_rate: 5.0e-7
logging_steps: 10
lr_scheduler_type: cosine
max_prompt_length: 512
num_train_epochs: 1
optim: adamw_torch
output_dir: data/openhermes-2.5-mistral-7b-dpo-v1.0
per_device_train_batch_size: 8
per_device_eval_batch_size: 8
push_to_hub_revision: true
save_strategy: "steps"
save_steps: 100
save_total_limit: 1
seed: 42
warmup_ratio: 0.1
```

A similar base configuration file was created for the Zephyr experiment.

The chat template is automatically inferred from the base chat model, with OpenHermes-2.5 using the ChatML format and Zephyr using the H4 chat template. Alternatively, if you want to use your own chat format, the 🤗 tokenizers library now enables user-defined chat templates via jinja format strings:

```jinja
{% for message in messages %}
{% if message['role'] == 'user' %}
{{ '<|user|>' + message['content'] + eos_token }}
{% elif message['role'] == 'system' %}
{{ '<|system|>' + message['content'] + eos_token }}
{% elif message['role'] == 'assistant' %}
{{ '<|assistant|>' + message['content'] + eos_token }}
{% endif %}
{% if loop.last and add_generation_prompt %}
{{ '<|assistant|>' }}
{% endif %}
{% endfor %}
```

This will format the conversation as follows:

```text
<|system|>
You are a friendly chatbot that always responds in a pirate style.</s>
<|user|>
How many helicopters can a person eat at once?</s>
<|assistant|>
Ah, my dear mate! But your question is a mystery! A person cannot eat a helicopter at once, because helicopters are not food. They are made of metal, plastic, and other materials, not food!
```

Hyperparameter Scanning

DPO, IPO, and KTO were all trained with TRL’s DPOTrainer, selecting the method through the loss_type parameter, with β values from 0.01 to 0.9 (0.01, then 0.1 through 0.9 in steps of 0.1). β was scanned because we observed that some alignment algorithms are particularly sensitive to it. All experiments were trained for one epoch, and every other hyperparameter, including the random seed, was held fixed across runs.
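The scan is a full grid over base configs, loss types and β values. As a quick sanity check on its size, the sketch below enumerates the grid in Python, using the values and naming patterns from the launch script in this post. The `build_runs` helper is hypothetical and is not part of the alignment-handbook.

```python
from itertools import product

CONFIGS = ["zephyr", "openhermes"]
LOSS_TYPES = ["sigmoid", "kto_pair", "ipo"]
# Kept as strings so the names match the shell script exactly.
BETAS = ["0.01", "0.1", "0.2", "0.3", "0.4", "0.5", "0.6", "0.7", "0.8", "0.9"]


def build_runs(configs, loss_types, betas):
    """Return one dict per (config, loss_type, beta) combination."""
    runs = []
    for config, loss_type, beta in product(configs, loss_types, betas):
        runs.append(
            {
                "job_name": f"{config}_{loss_type}_beta_{beta}",
                "model_revision": f"{loss_type}-{beta}",
                "output_dir": f"data/{config}-7b-align-scan-{loss_type}-beta-{beta}",
            }
        )
    return runs


if __name__ == "__main__":
    runs = build_runs(CONFIGS, LOSS_TYPES, BETAS)
    print(len(runs))  # 2 configs x 3 loss types x 10 betas = 60
    print(runs[0]["job_name"])
```

Sixty single-epoch runs is small enough to submit as independent jobs, which is what the launch script does.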

The scan was then launched on the HF Mirror cluster using the base configuration defined above:

```bash
#!/bin/bash

# Base configs we wish to fine-tune
configs=("zephyr" "openhermes")
# Loss types to scan
loss_types=("sigmoid" "kto_pair" "ipo")
# Beta values to scan
betas=("0.01" "0.1" "0.2" "0.3" "0.4" "0.5" "0.6" "0.7" "0.8" "0.9")

# Outer loop over base configs
for config in "${configs[@]}"; do
    # Middle loop over loss types
    for loss_type in "${loss_types[@]}"; do
        # Inner loop over beta values
        for beta in "${betas[@]}"; do
            # Braces are required: "$config_" would expand an unset variable named config_
            job_name="${config}_${loss_type}_beta_${beta}"
            model_revision="${loss_type}-${beta}"

            # Submit the job; the quoted string carries the per-run overrides
            sbatch --job-name="${job_name}" recipes/launch.slurm dpo pref_align_scan "config_${config}" deepspeed_zero3 \
                "--beta=${beta} --loss_type=${loss_type} --output_dir=data/${config}-7b-align-scan-${loss_type}-beta-${beta} --hub_model_revision=${model_revision}"
        done
    done
done
```

Empirical Evaluation and Practical Insights

Experiments evaluating DPO, IPO and KTO on 7B parameter models across various datasets found:

- Choosing the right hyperparameters, especially the β value controlling reference model preference weight, is crucial for all methods

- DPO and IPO achieve comparable results, outperforming KTO in the paired preference setting

- The optimal β value varies significantly between algorithms and models

While DPO appears to be the most robust and best performing LLM alignment algorithm currently, KTO is a promising development as it can leverage more abundant real-world data where outputs are simply rated positively or negatively.

From my experience working on language model alignment, I’ve found that thoroughly exploring the hyperparameter space is essential. A small tweak can yield substantial gains. I recommend starting with the settings from published experiments and iteratively tuning from there.

It’s also worth noting that the choice of base model matters a great deal. In the experiments discussed above, the OpenHermes-2.5-Mistral-7B model was clearly stronger out of the box, with alignment providing only a 0.3 boost to its MT Bench score. So be sure to start with the best foundation model you can for your use case.

The Future of Language Model Alignment

As we’ve seen, preference optimization techniques like DPO, IPO and KTO are enabling LLMs to behave in increasingly helpful, harmless and aligned ways. However, this remains an active area of research with ample room for improvement.

Some key open questions include:

- How can we collect higher-quality preference data more efficiently and cost-effectively?

- What other optimization objectives could further improve alignment robustness and generalization?

- How well do these techniques scale to even larger models with hundreds of billions or trillions of parameters?

Personally, I’m excited to see continued innovation in learning from more diverse forms of human feedback, like the binary signals leveraged by KTO. Making alignment optimization easier and more practical will be key to unlocking the full potential of LLMs.

Stay tuned for further developments in this fast-moving field. By adopting best practices like DPO and KTO, we can create language models that are not only incredibly capable but also fundamentally aligned with human values and goals. The future looks bright indeed.

What is the significance of aligning LLMs with human preferences?

Aligning large language models (LLMs) with human preferences is crucial for creating AI systems that are more intuitive and user-friendly. This alignment helps ensure that the outputs of LLMs are relevant, ethical, and aligned with societal values, ultimately enhancing user trust and satisfaction. For more information, visit the Hugging Face blog.

How do DPO, IPO, and KTO compare in terms of performance?

In the experiments discussed above, Direct Preference Optimization (DPO) and Identity Preference Optimization (IPO) achieve comparable results and both outperform Kahneman-Tversky Optimization (KTO) in the paired preference setting, with DPO appearing the most robust overall. DPO’s approach of directly optimizing on preference data allows for more stable and effective model alignment. For detailed comparisons, refer to the arXiv paper on DPO.

What are the practical applications of KTO in real-world scenarios?

Kahneman-Tversky Optimization (KTO) is particularly effective in environments where binary feedback (good or bad) is readily available. Its simplicity makes it suitable for applications like chatbots and recommendation systems, where quick feedback loops are essential. For an in-depth look at KTO, check out the NobleFilt article.

How can organizations implement these alignment methods effectively?

Organizations can implement DPO, IPO, and KTO by first collecting relevant preference data from users and then applying the respective optimization techniques to fine-tune their LLMs. Continuous evaluation and adjustment of hyperparameters are also vital for achieving optimal performance. For guidance on implementation, see the Aman AI Journal.

What future trends can we expect in LLM alignment techniques?

Future trends in LLM alignment may include the integration of more sophisticated feedback mechanisms, the use of hybrid models that combine aspects of DPO, IPO, and KTO, and advancements in interpretability to better understand how these models align with human preferences. For insights on emerging trends, explore the latest research updates on OpenReview.