The technology for converting text information into knowledge graphs has been a favorite in the research community since its inception. The rise of large language models (LLMs) has brought more attention to this field, but the high costs associated with LLMs can be a deterrent. However, by fine-tuning and optimizing smaller models, we can find a more cost-effective solution.

Today, I would like to introduce Relik, a fast and lightweight information extraction framework developed by the Natural Language Processing team at Sapienza University of Rome.

1. Information Extraction Process

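Without relying on LLMs, the information extraction process typically includes the following steps: coreference resolution, named entity recognition, entity linking, and relation extraction.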

The above diagram presents the complete information extraction process, starting with a simple text input: “Tomaz likes to write blog posts. He is particularly interested in drawing diagrams.” The process begins with coreference resolution, identifying “Tomaz” and “He” as referring to the same individual. Next, Named Entity Recognition (NER) techniques identify key entities such as “Tomaz,” “Blog,” and “Diagram.”

Subsequently, the entity linking step maps these entities to the corresponding entries in a database or knowledge base. For example, “Tomaz” corresponds to “Tomaz Bratanic (Q12345)” and “Blog” corresponds to “Blog (Q321).” However, “Diagram” has no matching entry in the knowledge base.
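To make the linking step concrete, here is a minimal sketch that treats the knowledge base as a plain Python dictionary. The dictionary and the helper name `link_entity` are hypothetical, and the IDs are the illustrative ones from the example above. Real entity linkers such as Relik retrieve candidate entries and disambiguate them using the surrounding context rather than doing an exact string lookup.

```python
from typing import Optional

# Hypothetical, hand-written knowledge base standing in for a real one
# (e.g. Wikipedia); keys are lower-cased mention strings.
KNOWLEDGE_BASE = {
    "tomaz": "Tomaz Bratanic (Q12345)",
    "blog": "Blog (Q321)",
}

def link_entity(mention: str) -> Optional[str]:
    """Return the knowledge-base entry for a mention, or None if there is no match."""
    return KNOWLEDGE_BASE.get(mention.strip().lower())

for mention in ["Tomaz", "Blog", "Diagram"]:
    print(mention, "->", link_entity(mention))
```

As in the example, “Diagram” comes back as `None`: the entity was recognized, but there is nothing in the knowledge base to link it to.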

The relation extraction step then analyzes the connections between entities. It identifies a “WRITES” relationship between “Tomaz” and “Blog,” meaning that Tomaz writes blogs, and an “INTERESTED_IN” relationship between “Tomaz” and “Diagram,” meaning that he is interested in diagrams.

Finally, this structured information about entities and relationships is integrated into a knowledge graph, providing an organized and easily accessible resource for subsequent data analysis or information retrieval.
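The relation extraction and integration steps can be sketched in a few lines of plain Python, assuming relations are represented as (head, relation, tail) triples. The `Triple` type and `build_graph` helper are hypothetical, and the triples are hand-written from the example rather than produced by a model; a graph database such as Neo4j stores the same information as nodes and relationships.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple, Tuple

class Triple(NamedTuple):
    head: str
    relation: str
    tail: str

# Relations from the example sentence, written by hand for illustration.
triples = [
    Triple("Tomaz", "WRITES", "Blog"),
    Triple("Tomaz", "INTERESTED_IN", "Diagram"),
]

def build_graph(triples: List[Triple]) -> Dict[str, List[Tuple[str, str]]]:
    """Group triples into an adjacency map: node -> list of (relation, neighbor)."""
    graph = defaultdict(list)
    for t in triples:
        graph[t.head].append((t.relation, t.tail))
        graph.setdefault(t.tail, [])  # make sure tail nodes exist even without outgoing edges
    return dict(graph)

graph = build_graph(triples)
print(graph)
```

Keeping edges per source node turns a question like “what is Tomaz connected to?” into a single lookup, which is the kind of access pattern a graph database is optimized for.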

In the absence of large language models (LLMs), information extraction typically relies on a series of specialized models, one each for coreference resolution, named entity recognition, entity linking, and relation extraction. Integrating these models requires extra effort and careful tuning, but because each model is small and task-specific, this approach lowers the overall cost of building and maintaining the system. For more advanced strategies, refer to our guide on Master RAG Optimization in 2024.

Code is available on GitHub: LlamaIndex Relik GitHub Repository.

2. Environment Setup and Data Preparation

It is recommended to use an isolated Python environment, such as Google Colab, to manage project dependencies.
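Because some of these packages, Coreferee in particular, can be awkward to install, it can help to check which ones are importable before running the notebook. This is a small sketch using only the standard library; the module list and the helper name `missing_modules` are our own assumptions based on the imports used later in this article.

```python
import importlib.util
from typing import Iterable, List

# Top-level modules imported later in this walkthrough (our assumption; adjust as needed).
REQUIRED_MODULES = ["pandas", "spacy", "coreferee", "llama_index"]

def missing_modules(names: Iterable[str]) -> List[str]:
    """Return the names that cannot be found in the current environment (without importing them)."""
    return [name for name in names if importlib.util.find_spec(name) is None]

missing = missing_modules(REQUIRED_MODULES)
print("Missing modules:", ", ".join(missing) if missing else "none")
```

An empty result only means the packages can be found; it does not guarantee that their versions are compatible with each other.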

Next, set up a Neo4j graph database to store the extracted data. Neo4j Aura is a good choice: it offers a free cloud instance and works well with Google Colab notebooks.
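The connection code in the next step hard-codes the Neo4j credentials. If you would rather keep them out of the notebook, one option is to read them from environment variables. The variable names `NEO4J_URI`, `NEO4J_USERNAME`, and `NEO4J_PASSWORD` and the helper `neo4j_settings` are our own choices for this sketch, not something Neo4j or LlamaIndex requires.

```python
import os
from typing import Dict, Mapping, Optional

REQUIRED_VARS = ("NEO4J_URI", "NEO4J_USERNAME", "NEO4J_PASSWORD")

def neo4j_settings(env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Build Neo4j connection settings from environment variables.

    The variable names are a convention chosen for this sketch.
    """
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if name not in env]
    if missing:
        raise KeyError(f"Missing environment variables: {', '.join(missing)}")
    return {
        "url": env["NEO4J_URI"],
        "username": env["NEO4J_USERNAME"],
        "password": env["NEO4J_PASSWORD"],
    }
```

The returned keys match the keyword arguments passed to `Neo4jPGStore` below, so the dictionary could be unpacked as `Neo4jPGStore(**neo4j_settings(), refresh_schema=False)`.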

Once the database is set up, you can establish a database connection using LlamaIndex.

```python
from llama_index.graph_stores.neo4j import Neo4jPGStore

username = "neo4j"
password = "rubber-cuffs-radiator"
url = "bolt://54.89.19.156:7687"

graph_store = Neo4jPGStore(
    username=username,
    password=password,
    url=url,
    refresh_schema=False
)
```

Dataset

Here, we use a news dataset obtained through the Diffbot API.

```python
import pandas as pd

NUMBER_OF_ARTICLES = 100

news = pd.read_csv("https://raw.githubusercontent.com/tomasonjo/blog-datasets/main/news_articles.csv")
news = news.head(NUMBER_OF_ARTICLES)
```

3. Technical Implementation

The information extraction process begins with coreference resolution, which is tasked with identifying different expressions referring to the same entity in the text.

Currently, there are relatively few open-source models available for coreference resolution. After comparing several options, we chose Coreferee, a coreference resolution extension for spaCy. Note that installing Coreferee can run into dependency conflicts.

Load the coreference resolution model in spaCy using the following code:

```python
import spacy
import coreferee  # importing registers the 'coreferee' pipeline component with spaCy

coref_nlp = spacy.load('en_core_web_lg')
coref_nlp.add_pipe('coreferee')
```

The Coreferee model identifies clusters of expressions in the text that refer to the same entity or group of entities. To rewrite the text based on these clusters, we need a custom function:

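```python
def coref_text(text):
    coref_doc = coref_nlp(text)
    resolved_text = ""

    for token in coref_doc:
        # resolve() returns the token(s) this token refers to, or None
        repres = coref_doc._.coref_chains.resolve(token)
        if repres:
            # Replace the mention with what it refers to; for named entities,
            # use the full entity span text instead of a single token
            resolved_text += " " + " and ".join(
                t.text if t.ent_type_ == "" else [e.text for e in coref_doc.ents if t in e][0]
                for t in repres
            )
        else:
            resolved_text += " " + token.text

    # Drop the leading space added by the first concatenation
    return resolved_text.strip()
```

Test this function to ensure that the model and dependencies are set up correctly: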

```python
print(coref_text("Tomaz is so cool. He can solve various Python dependencies and not cry."))
```

In this example, the model correctly recognizes that “Tomaz” and “He” refer to the same entity, so coref_text replaces “He” with “Tomaz.”

Note that this rewriting does not always produce grammatically correct sentences, because it simply substitutes each mention with the text of the entity it refers to. Nevertheless, the method is good enough for most use cases.
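The replacement logic, and why it can break grammar, is easy to see in a library-free sketch. Here the clusters are given by hand as lists of token indices, with the first index treated as the representative mention; in the real pipeline, Coreferee finds the clusters. The function name `resolve_clusters` is hypothetical.

```python
from typing import List

def resolve_clusters(tokens: List[str], clusters: List[List[int]]) -> str:
    """Replace every later mention in a cluster with the cluster's first mention.

    `tokens` is a pre-tokenized sentence; each cluster lists the indices of
    tokens that refer to the same entity (hand-written here, not model output).
    """
    replacement = {}
    for cluster in clusters:
        representative = tokens[cluster[0]]
        for index in cluster[1:]:
            replacement[index] = representative
    return " ".join(replacement.get(i, tok) for i, tok in enumerate(tokens))

tokens = "Tomaz is so cool . He can solve various Python dependencies and not cry .".split()
print(resolve_clusters(tokens, [[0, 5]]))

# Direct substitution ignores grammar: the possessive "her" becomes "Anna".
print(resolve_clusters("Anna lost her keys .".split(), [[0, 2]]))
```

The second call yields “Anna lost Anna keys .”, which is ungrammatical, but the entity reference is now explicit, which is what the later extraction steps rely on.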

Now, apply this coreference resolution technique to our news dataset and convert it into LlamaIndex document format:

```python
from llama_index.core import Document

news["coref_text"] = news["text"].apply(coref_text)

documents = [
    Document(text=f"{row['title']}: {row['coref_text']}")
    for i, row in news.iterrows()
]
```

Entity Linking and Relation Extraction

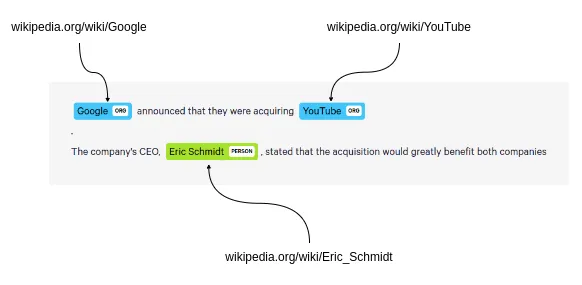

The Relik library combines entity linking and relation extraction, so the two techniques can be applied together. For entity linking, Relik uses Wikipedia as its knowledge base, mapping entity mentions in the text to the corresponding Wikipedia entries.
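Linking matters for the graph because it lets different surface forms of the same entity collapse into a single node. A minimal sketch, assuming the linker's output is available as a mapping from mention text to a knowledge-base entry; the `canonicalize` helper and the mapping below are hypothetical and reuse the illustrative IDs from Section 1.

```python
from typing import Dict, List, Tuple

Triple = Tuple[str, str, str]

def canonicalize(triples: List[Triple], links: Dict[str, str]) -> List[Triple]:
    """Replace each endpoint with its linked entry (if any) and drop duplicates.

    Mentions without a link keep their original text, so unlinked entities
    such as "Diagram" still appear in the graph. Output is sorted for stability.
    """
    def canon(mention: str) -> str:
        return links.get(mention, mention)

    return sorted({(canon(head), rel, canon(tail)) for head, rel, tail in triples})

links = {
    "Tomaz": "Tomaz Bratanic (Q12345)",
    "Tomaz Bratanic": "Tomaz Bratanic (Q12345)",
    "Blog": "Blog (Q321)",
    "blog": "Blog (Q321)",
}

triples = [
    ("Tomaz", "WRITES", "Blog"),
    ("Tomaz Bratanic", "WRITES", "blog"),
    ("Tomaz", "INTERESTED_IN", "Diagram"),
]

for triple in canonicalize(triples, links):
    print(triple)
```

Without linking, “Tomaz” and “Tomaz Bratanic” would become two separate nodes; with it, the two WRITES facts merge into one relationship.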

For relation extraction, Relik turns raw unstructured text into structured information by identifying the relationships between the entities it mentions.
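Relation labels are often free-text phrases (for example, “place of birth”), while Neo4j relationship types are conventionally upper-case with underscores, like the “WRITES” and “INTERESTED_IN” relations in the earlier example. The sketch below shows one way to normalize labels and drop duplicate triples; the helper names are hypothetical, and this is not the exact post-processing that Relik or LlamaIndex performs.

```python
import re
from typing import Iterable, List, Tuple

Triple = Tuple[str, str, str]

def to_relationship_type(label: str) -> str:
    """Turn a free-text label such as 'place of birth' into 'PLACE_OF_BIRTH'."""
    cleaned = re.sub(r"[^0-9A-Za-z]+", "_", label.strip())
    return cleaned.strip("_").upper()

def normalize_triples(raw: Iterable[Triple]) -> List[Triple]:
    """Normalize relation labels and drop exact duplicates, preserving first-seen order."""
    seen = set()
    result = []
    for head, label, tail in raw:
        triple = (head, to_relationship_type(label), tail)
        if triple not in seen:
            seen.add(triple)
            result.append(triple)
    return result

raw_triples = [
    ("Tomaz", "writes", "Blog"),
    ("Tomaz", "interested in", "Diagram"),
    ("Tomaz", "Writes", "Blog"),  # same fact, different casing
]
print(normalize_triples(raw_triples))
```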

If you are using the free version of Colab, use the relik-ie/relik-relation-extraction-small model, which performs relation extraction only. If you have Colab Pro or plan to run on a more powerful local machine, consider the relik-ie/relik-cie-small model, which performs both relation extraction and entity linking.

```python
from llama_index.extractors.relik.base import RelikPathExtractor

relik = RelikPathExtractor(
    model="relik-ie/relik-relation-extraction-small"
)
```

Additionally, we must define the embedding model to be used for embedding entities and the LLM for the question-and-answer process:

```python
import os

from llama_index.embeddings.openai import OpenAIEmbedding
from llama_index.llms.openai import OpenAI

os.environ["OPENAI_API_KEY"] = "sk-"

llm = OpenAI(model="gpt-4o", temperature=0.0)
embed_model = OpenAIEmbedding(model_name="text-embedding-3-small")
```

Note that during the construction of the knowledge graph, large language models (LLMs) will not be utilized.

4. Building and Applying the Knowledge Graph

Now that everything is set up, we can create a PropertyGraphIndex instance and load the news documents into the knowledge graph.

To extract relationships from the documents, pass the relik extractor in the kg_extractors parameter.

from llama_index.core import PropertyGraphIndex

index = PropertyGraphIndex.from_documents(

documents,

kg_extractors=[relik],

llm=llm,

embed_model=embed_model,

property_graph_store=graph_store,

show_progress=True

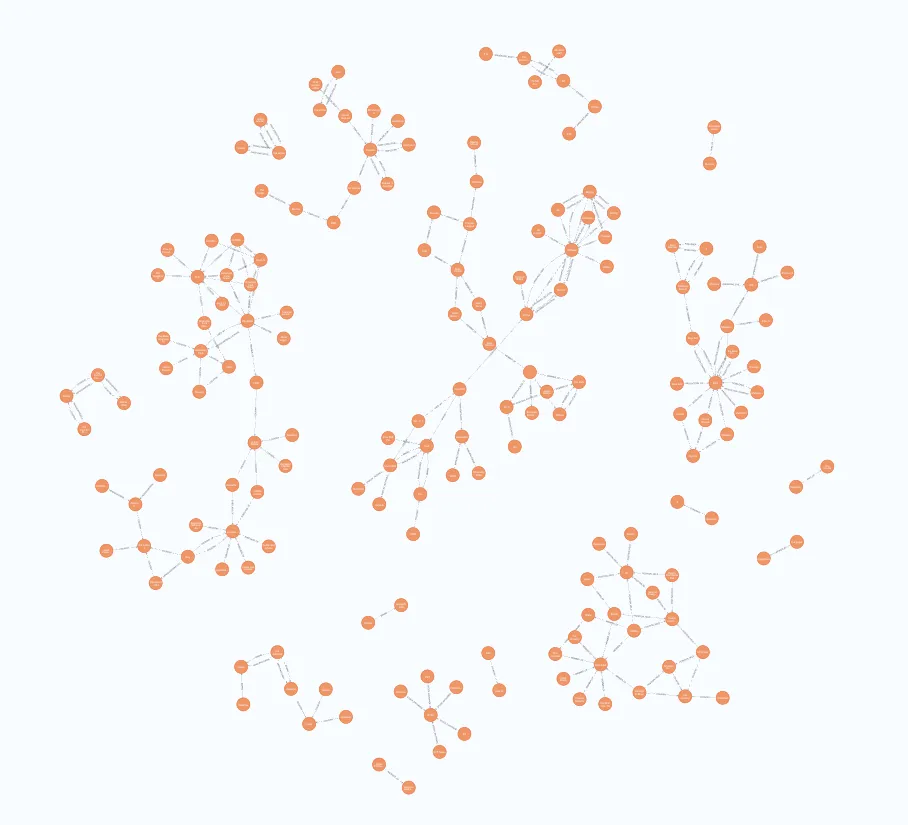

)Once the graph is built, you can open the Neo4j browser to verify the imported graph. Run the following Cypher statement to obtain a similar visualization:

```cypher
MATCH p=(:__Entity__)--(:__Entity__)
RETURN p LIMIT 250
```

For insights on integrating RAG with graph technologies, refer to GraphRAG: The Next-Gen RAG Powering Smarter AI Search.

5. Implementing Q&A Functionality

With LlamaIndex, question answering is now straightforward. You can use the built-in graph retriever to ask questions directly:

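```python
query_engine = index.as_query_engine(include_text=True)

response = query_engine.query("What happened at Ryanair?")

print(str(response))
```

This is where the LLM and embedding model defined earlier come into play: the embedding model helps retrieve relevant nodes from the graph, and the LLM composes the answer from the retrieved context.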
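Roughly speaking, embedding-based graph retrieval finds the graph entities most similar to the question and then collects the relationships around them as context for the LLM. The following is a simplified, library-free illustration of that idea, not LlamaIndex's actual retriever: the three-dimensional vectors are made up, and `retrieve_context` and `format_context` are hypothetical helpers.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]
Triple = Tuple[str, str, str]

def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def retrieve_context(question_vec: Vector, entity_vecs: Dict[str, Vector],
                     triples: List[Triple], top_k: int = 1) -> List[Triple]:
    """Seed with the top_k most similar entities, then keep every triple touching a seed (one hop)."""
    ranked = sorted(entity_vecs, key=lambda name: cosine(question_vec, entity_vecs[name]), reverse=True)
    seeds = set(ranked[:top_k])
    return [t for t in triples if t[0] in seeds or t[2] in seeds]

def format_context(triples: List[Triple]) -> str:
    """Render triples as plain-text lines that could be placed in an LLM prompt."""
    return "\n".join(f"{h} {r} {t}" for h, r, t in triples)

# Made-up embeddings; a real system would compute them with an embedding model.
entity_vecs = {
    "Tomaz": [0.9, 0.1, 0.0],
    "Blog": [0.1, 0.9, 0.0],
    "Diagram": [0.0, 0.2, 0.9],
}
triples = [("Tomaz", "WRITES", "Blog"), ("Tomaz", "INTERESTED_IN", "Diagram")]

question_vec = [0.1, 0.95, 0.05]  # stands in for an embedded question about blogs
print(format_context(retrieve_context(question_vec, entity_vecs, triples)))
```

Expanding one hop from the seed entities is what lets a question about one entity surface related facts that never mention the question's wording directly.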

6. Conclusion

Building knowledge graphs without relying on large language models is practical, cost-effective, and efficient. By using smaller, task-specific models such as those in the Relik framework, retrieval-augmented generation (RAG) applications can extract information efficiently.

Entity linking serves as a crucial step, ensuring that the identified entities are accurately mapped to corresponding entries in the knowledge base, thereby maintaining the integrity and usability of the knowledge graph.

To dive deeper into the implications of RAG technologies, check out 2024’s Ultimate Guide to RAG: AI Revolution & Document Parsing.

With the help of the Relik framework and the Neo4j platform, we can construct powerful knowledge graphs that support complex data analysis and retrieval tasks while avoiding the high cost of using large language models for extraction. This approach makes advanced data processing tools more accessible and makes the information extraction process more efficient.