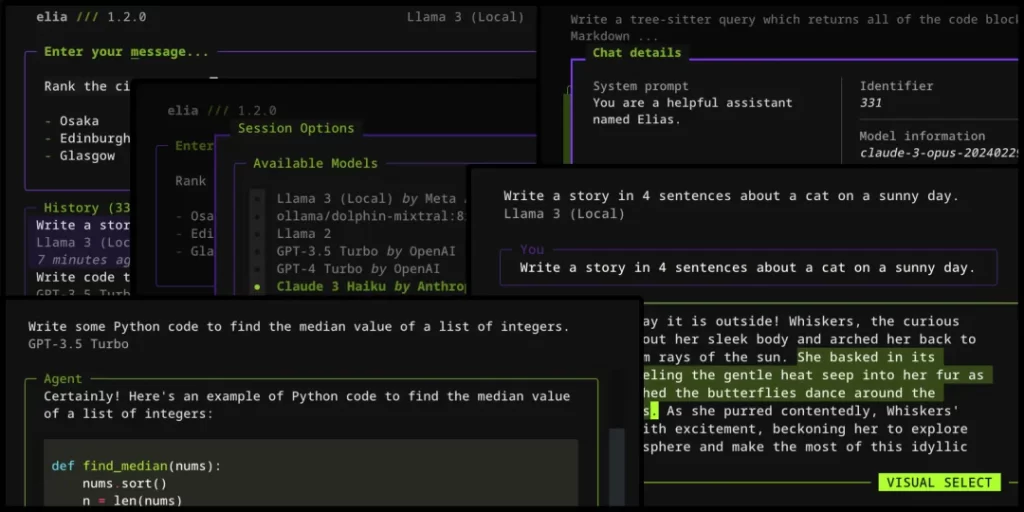

Elia is a lightweight, keyboard-focused terminal application for chatting with Large Language Models (LLMs). It runs entirely in the terminal and offers a streamlined interface to both cloud-based and local models.

Supported Models

Elia works with a range of LLMs, including:

- ChatGPT

- Claude

- Local models such as:

  - Llama 3

  - Phi 3

  - Mistral

  - Gemma

This diverse support allows users to leverage multiple AI models for various tasks and compare responses across different platforms.

Key Features and Use Cases

Local Conversation Storage

Elia stores conversations locally, enabling users to:

- Review past interactions

- Analyze dialogue patterns

- Track progress over time

This feature is particularly useful for researchers, writers, and developers who need to reference previous conversations or track the evolution of their queries and the AI’s responses.

Multi-Model Support

The ability to interact with multiple LLMs through a single interface offers several advantages:

- Easily compare responses from different models

- Identify strengths and weaknesses of various LLMs

- Choose the most appropriate model for specific tasks

Rapid Input and Information Retrieval

Elia’s keyboard-centric design makes it ideal for scenarios requiring quick input and fast information access, such as:

- Programming: Quickly look up syntax or debugging tips

- Writing: Generate ideas or overcome writer’s block

- Research: Rapidly explore concepts or gather preliminary information

Installation and Setup

Installing Elia

To install Elia, use the pipx tool, which allows for isolated installation of Python applications:

    pipx install elia-chat

Setting Up Environment Variables

Depending on the models you intend to use, you may need to set up one or more environment variables. Common variables include:

- OPENAI_API_KEY for ChatGPT

- ANTHROPIC_API_KEY for Claude

- GEMINI_API_KEY for Google’s Gemini models

Consult the documentation for each specific model to ensure proper configuration.
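As a quick sanity check before launching Elia, the short Python sketch below reports which of the variables listed above are unset. The names `API_KEY_VARS` and `missing_api_keys` are hypothetical helpers written for this illustration and are not part of Elia.

```python
import os

# Variable names come from the list above; the labels are for display only.
API_KEY_VARS = {
    "OPENAI_API_KEY": "ChatGPT",
    "ANTHROPIC_API_KEY": "Claude",
    "GEMINI_API_KEY": "Google Gemini",
}


def missing_api_keys(env=None):
    """Return the names of API key variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in API_KEY_VARS if not env.get(name)]


if __name__ == "__main__":
    for name in missing_api_keys():
        print(f"{name} is not set (needed for {API_KEY_VARS[name]})")
```

You only need the variables for the providers you actually plan to use, so a missing entry is not necessarily a problem.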

Quick Start Guide

Basic Usage

Launch Elia from the command line:

    elia

Inline Chat Mode

For a quick interaction without entering full-screen mode, use the -i or --inline option:

    elia -i "What is the Zen of Python?"

Full-Screen Mode

Start a new chat in full-screen mode with a specific query:

    elia "Tell me a cool fact about lizards!"

Specifying Models

Use the -m or --model flag to choose a specific LLM:

    elia -m gpt-4o

Advanced Usage

Combine options for more complex interactions. For example, to start an inline chat with Gemini 1.5 Flash:

    elia -i -m gemini/gemini-1.5-flash-latest "How do I call Rust code from Python?"

Running Local Models

Elia also supports interaction with locally-hosted models. To set this up:

- Install Ollama, a tool for running LLMs locally

- Pull your desired model (e.g., ollama pull llama3)

- Start the Ollama server: ollama serve

- Add the model to Elia’s configuration file (see Configuration section)
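Before adding a local model to the configuration, it can help to confirm that the Ollama server is actually reachable. The sketch below is one way to do that from Python. It assumes Ollama is listening on its default address, http://localhost:11434, and `ollama_is_running` is a hypothetical helper name, not part of Elia or Ollama.

```python
import urllib.error
import urllib.request

# Assumption: Ollama listens on its default address.
OLLAMA_URL = "http://localhost:11434"


def ollama_is_running(base_url=OLLAMA_URL, timeout=2.0):
    """Return True if an HTTP server answers with status 200 at base_url."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or an HTTP error status.
        return False


if __name__ == "__main__":
    if ollama_is_running():
        print("Ollama server is reachable")
    else:
        print("No server found; try running: ollama serve")
```

The check only confirms that something answers at that address. It does not verify that a particular model has been pulled.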

Configuration

Elia offers extensive customization options through its configuration file. The file’s location is shown at the bottom of the options window, which you open with ctrl+o.

Sample Configuration

    # Default model selection on startup
    default_model = "gpt-4o"

    # System prompt on launch
    system_prompt = "You are a helpful assistant who talks like a pirate."

    # Syntax highlighting theme for code in messages
    message_code_theme = "dracula"

    # Example: Adding local Llama 3 support
    [[models]]
    name = "ollama/llama3"

    # Example: Model running on a local server (e.g., LocalAI)
    [[models]]
    name = "openai/some-model"
    api_base = "http://localhost:8080/v1"
    api_key = "api-key-if-required"

    # Example: Adding a Groq model with additional settings
    [[models]]
    name = "groq/llama2-70b-4096"
    display_name = "Llama 2 70B"
    provider = "Groq"
    temperature = 1.0
    max_retries = 0

    # Example: Multiple instances of one model (e.g., work vs. personal OpenAI accounts)
    [[models]]
    id = "work-gpt-3.5-turbo"
    name = "gpt-3.5-turbo"
    display_name = "GPT 3.5 Turbo (Work)"

    [[models]]
    id = "personal-gpt-3.5-turbo"
    name = "gpt-3.5-turbo"
    display_name = "GPT 3.5 Turbo (Personal)"

This configuration covers default model selection, the system prompt, the code highlighting theme, and multiple instances of the same model for different use cases.

Additional Features

Importing from ChatGPT

Elia allows users to import conversations from ChatGPT:

- Export your ChatGPT conversations as a JSON file

- Use the import command in Elia:

    elia import 'path/to/conversations.json'

This feature carries your ChatGPT conversation history over to Elia, easing the transition between the two.
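Before running the import, it may be worth confirming that the export file is readable JSON. The sketch below assumes the export is a JSON array with one object per conversation. That layout is an assumption about the export format, not something Elia documents here, and `count_conversations` is a hypothetical helper name.

```python
import json
from pathlib import Path


def count_conversations(export_path):
    """Return how many top-level entries the export file contains."""
    data = json.loads(Path(export_path).read_text(encoding="utf-8"))
    # Assumption: the export is a JSON array, one object per conversation.
    if not isinstance(data, list):
        raise ValueError("expected a JSON array of conversations")
    return len(data)
```

A call such as `count_conversations("path/to/conversations.json")` returns the number of entries, or raises an error if the file is not valid JSON or has an unexpected top-level shape.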

Database Management

To reset Elia’s database and start fresh:

    elia reset

Use this command with caution, as it will erase all stored conversations.

Uninstallation

If you need to remove Elia, use the following command:

    pipx uninstall elia-chat

Conclusion

Elia’s terminal-based, keyboard-centric design offers a fast and efficient way to work with Large Language Models. Whether you’re a developer, researcher, or writer, it provides a flexible tool for putting modern LLMs to use.

As AI and language models continue to evolve, tools like Elia help make them more accessible. By supporting both cloud-based and local models, Elia serves a wide range of users, from those who want the latest hosted models to those who prioritize data privacy and offline use.

Remember to check the official GitHub repository for the most up-to-date features and instructions, as the project may continue to evolve and improve over time.