We are on the cusp of realizing that to do anything meaningful with GenAI, you can’t just rely on autoregressive LLMs to make decisions. I know what you’re thinking: “RAG is the answer.” Or fine-tuning, or GPT-5.

Yes. Vector-based RAG and techniques like fine-tuning can help. They’re good enough for some use cases already. But there’s another class of use case where these techniques hit a ceiling. Vector-based RAG, like fine-tuning, increases the probability of a correct answer for many kinds of questions. However, neither technique can guarantee that the answer is correct, and neither leaves you with many clues as to why it made a particular decision.

The Shift to Knowledge Graphs

Back in 2012, Google launched their second-generation search engine and published a landmark blog post titled “Introducing the Knowledge Graph: things, not strings.” They found that using a knowledge graph to organize the things that the strings on all those web pages represented, in addition to processing the strings themselves, opened up the potential for a quantum leap in capability. We’re seeing the same pattern in GenAI today. Many GenAI projects are hitting a ceiling because the solutions they’re using deal with strings, not things.

Today, the AI engineers and academic researchers at the cutting edge are finding the same thing Google did: the secret to breaking through this ceiling is knowledge graphs. In other words, infusing knowledge about things into statistical, text-based techniques. It’s like any other kind of RAG except that in addition to a vector index, it also calls a knowledge graph. Or put another way, GraphRAG!

What is a Graph?

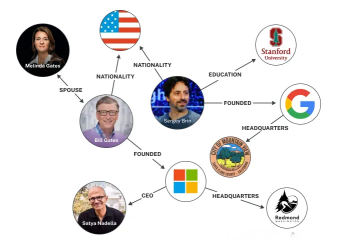

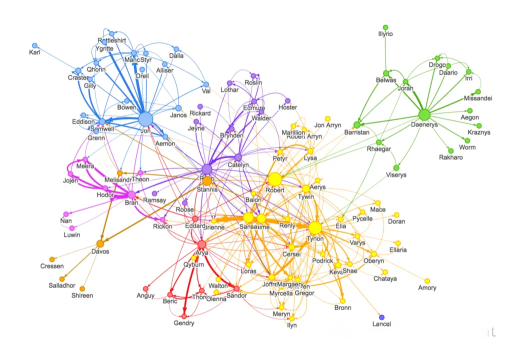

Let’s be clear, when we say Graph, we mean something like this:

Or this:

Or this:

Interestingly, Transport for London recently deployed a graph-driven digital twin to improve incident response and reduce congestion.

In other words, not a chart.

If you want to dive deep into charts vs. knowledge graphs, I’d point you to Neo4j’s GraphAcademy or Andrew Ng’s Deeplearning.ai course “RAG with Knowledge Graphs.” We won’t belabor definitions here, but will assume you have a basic understanding of graphs.

If the images above make sense, you’ll understand how the underlying knowledge graph data (stored in a graph database) can be queried as part of a RAG pipeline. That’s what GraphRAG is all about.

Two Types of Knowledge Representation: Vectors and Graphs

At the core of a typical RAG is a vector search that takes a piece of text and returns conceptually similar text from a candidate body of written material. This is a delightful automation and very useful for basic search.

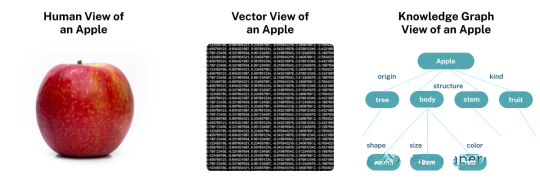

Each time you do this, you probably don’t think about what the vectors look like, or what the similarity calculation is doing. Let’s look at an apple from human terms, vector terms, and graph terms:

The human representation is complex and multidimensional in a way we can’t fully capture on paper. Let’s wax poetic for a moment and imagine that this beautiful, enticing image represents the apple in all its perceptual and conceptual glory.

The vector representation of an apple is an array of numbers—a construct from the field of statistics. The magic of vectors is that they each capture the essence of their corresponding text in encoded form. However, in the context of RAG, they’re only valuable when you need to determine how similar some words are to some other words. Doing so is as simple as running a similarity calculation (aka vector math) and getting a match. But if you want to look inside the vector, understand what’s around it, grasp what’s represented in the text, or understand how any of it fits into a larger context, the vector as a representation can’t do that.
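
To make the similarity calculation concrete, here is a minimal Python sketch of cosine similarity, one common choice of vector math for this step. The four-dimensional vectors are invented for illustration (real embedding models produce hundreds or thousands of dimensions), and `most_similar` is a hypothetical helper, not any particular vector database’s API.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy "embeddings": the numbers are made up and carry no labelled meaning.
embeddings = {
    "apple": [0.9, 0.1, 0.3, 0.0],
    "pear": [0.8, 0.2, 0.4, 0.1],
    "laptop": [0.1, 0.9, 0.0, 0.7],
}


def most_similar(query: str, k: int = 1) -> list[tuple[str, float]]:
    """Rank every other entry by cosine similarity to the query's vector."""
    q = embeddings[query]
    scored = [(name, cosine_similarity(q, vec))
              for name, vec in embeddings.items() if name != query]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


print(most_similar("apple"))
```

The sketch ranks “pear” well above “laptop” for “apple,” but a score is all you get back: no dimension is labeled “fruit” or “grows on trees,” which is the opacity described above.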

In contrast, a knowledge graph is a declarative (or in AI terms, symbolic) representation of the world. As such, it can be understood and reasoned over by both humans and machines. This is a big deal, and we’ll come back to it. What’s more, you can query, visualize, annotate, fix, and extend a knowledge graph. A knowledge graph represents your model of the world – the part of the world you’re dealing with in your domain.
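
By contrast, here is the same apple as a handful of explicit facts. This is a minimal sketch in plain Python, assuming a (subject, predicate, object) triple representation and relationship names (`IS_A`, `HAS_COLOR`, `GROWS_ON`) chosen purely for illustration. A graph database such as Neo4j stores a property graph rather than a Python set, but the ideas of querying, reasoning over, fixing, and extending the facts carry over.

```python
# A tiny knowledge graph about apples as (subject, predicate, object) triples.
# Facts and relationship names are illustrative assumptions.
triples = {
    ("Apple", "IS_A", "Fruit"),
    ("Apple", "GROWS_ON", "Apple tree"),
    ("Apple", "HAS_COLOR", "Red"),
    ("Apple", "HAS_COLOR", "Green"),
    ("Apple", "HAS_COLOR", "Blue"),  # a deliberate extraction error
    ("Fruit", "IS_A", "Food"),
}


def objects(subject: str, predicate: str) -> list[str]:
    """Query: every object linked to `subject` by `predicate`."""
    return sorted(o for s, p, o in triples if s == subject and p == predicate)


def is_a(entity: str, category: str) -> bool:
    """Reason: follow IS_A edges transitively (Apple -> Fruit -> Food)."""
    frontier, seen = [entity], set()
    while frontier:
        node = frontier.pop()
        if node == category:
            return True
        if node not in seen:
            seen.add(node)
            frontier.extend(objects(node, "IS_A"))
    return False


print(objects("Apple", "HAS_COLOR"))  # the error is visible to a human reviewer
print(is_a("Apple", "Food"))

# Fix and extend: each fact is explicit, so it can be corrected or added directly.
triples.discard(("Apple", "HAS_COLOR", "Blue"))
triples.add(("Apple", "HAS_COLOR", "Yellow"))
```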

GraphRAG “VS” RAG

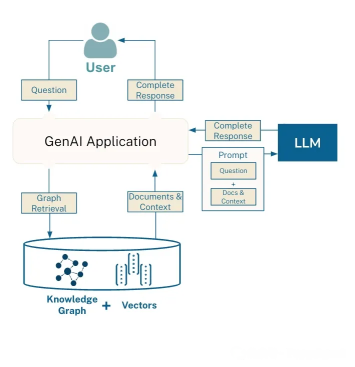

This isn’t a competition, vector and graph queries each add value in RAG in their own way. As Jerry Liu, founder of LlamaIndex, has pointed out, it’s helpful to think of GraphRAG as encompassing vectors. This is in contrast to “pure vector RAG” which is strictly based on similarity to embeddings of words in text. Fundamentally, GraphRAG is just RAG where the retrieval path includes a knowledge graph. As shown below, the core GraphRAG pattern is simple. It’s basically the same architecture as RAG with vectors, but with a knowledge graph layered in.

Here you can see that a graph query is triggered. It can optionally include a vector similarity component. You can choose to store the graph and vectors separately in two different databases, or use a graph database like Neo4j that supports vector search.

One common pattern for using GraphRAG is:

- Do a vector or keyword search to find an initial set of nodes.

- Traverse the graph to return information about related nodes.

- Optionally, re-rank documents using a graph-based ranking algorithm like PageRank.
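
The three steps above can be sketched in a few dozen lines of plain Python. This is an illustrative toy, not how any particular framework implements GraphRAG: the graph and company names are hypothetical, a keyword match stands in for vector search, traversal is limited to one hop, and PageRank is computed with a simple power iteration.

```python
# Hypothetical toy graph: node id -> text, plus directed edges between nodes.
nodes = {
    "chunk1": "Lithium supply risks for battery makers",
    "chunk2": "Quarterly revenue discussion",
    "AcmeBattery": "Company AcmeBattery",
    "VoltCars": "Company VoltCars",
    "Lithium": "Raw material lithium",
}
edges = [
    ("chunk1", "Lithium"),
    ("chunk1", "AcmeBattery"),
    ("AcmeBattery", "Lithium"),
    ("VoltCars", "AcmeBattery"),
    ("chunk2", "VoltCars"),
]


def keyword_search(query: str) -> set[str]:
    """Step 1 (stand-in for vector search): nodes sharing a word with the query."""
    terms = set(query.lower().split())
    return {n for n, text in nodes.items() if terms & set(text.lower().split())}


def neighbors(node: str) -> set[str]:
    """Step 2: one-hop traversal, ignoring edge direction."""
    return {d for s, d in edges if s == node} | {s for s, d in edges if d == node}


def pagerank(damping: float = 0.85, iterations: int = 50) -> dict[str, float]:
    """Step 3: PageRank by power iteration; dangling nodes spread rank evenly."""
    out = {n: [] for n in nodes}
    for src, dst in edges:
        out[src].append(dst)
    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        dangling = sum(rank[v] for v in nodes if not out[v])
        new = {v: (1 - damping) / n + damping * dangling / n for v in nodes}
        for src, targets in out.items():
            for dst in targets:
                new[dst] += damping * rank[src] / len(targets)
        rank = new
    return rank


def graph_rag_retrieve(query: str) -> list[str]:
    """Seed with search, expand by traversal, then re-rank by PageRank."""
    seeds = keyword_search(query)
    candidates = set(seeds)
    for seed in seeds:
        candidates |= neighbors(seed)
    scores = pagerank()
    return sorted(candidates, key=lambda c: scores[c], reverse=True)


print(graph_rag_retrieve("lithium supply"))
```

`AcmeBattery` is returned even though its own text never matches the query: it is reached by traversal from the seed nodes, which is the “connect the dots” behavior GraphRAG is after. Whether to re-rank by global PageRank, a personalized variant, or not at all is a design choice that varies by use case.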

The patterns vary by use case, and like everything else in AI today, GraphRAG is proving to be a rich field with new discoveries emerging every week. We’ll dedicate future blog posts specifically to the most common GraphRAG patterns we’re seeing today.

The GraphRAG Lifecycle

GenAI applications that use GraphRAG follow the same pattern as any RAG application, but with a “create graph” step added at the beginning:

Creating a graph is similar to chunking documents and loading them into a vector database. The good news is threefold:

- Graphs are highly iterative—you can start with a “minimum viable graph” and expand from there.

- Once data is in a knowledge graph, it becomes very easy to evolve. You can add more types of data to get the benefits of data network effects. You can also improve data quality to increase the value of application results.

- This part of the stack is rapidly improving, which means graph creation will get easier as tools become more sophisticated.

Adding the graph creation step to the previous image, you get a pipeline that looks like this:

I’ll dive into graph creation in a bit. For now, let’s set that aside and talk about the benefits of GraphRAG.

Why GraphRAG?

The advantages of GraphRAG over pure vector RAG fall into three main buckets:

- Higher accuracy and more complete answers (runtime/production benefits)

- Once a knowledge graph is created, building and subsequently maintaining a RAG application becomes easier (development time advantages)

- Better explainability, traceability, and access control (governance advantages)

Let’s dive in:

#1: Higher Accuracy and More Useful Answers

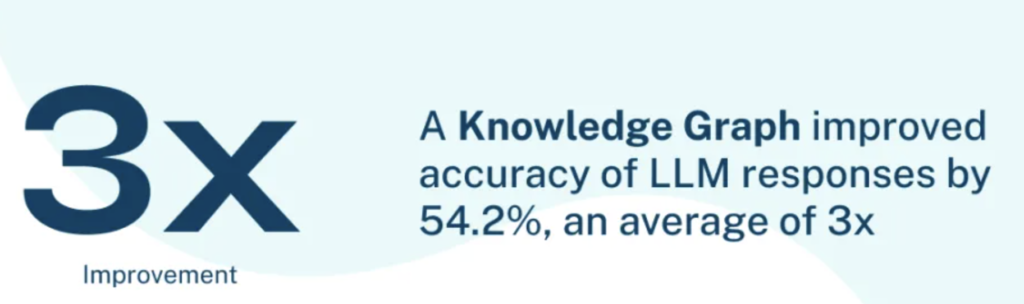

The first (and most direct) benefit we see from GraphRAG is higher-quality responses. In addition to the growing number of examples we’re seeing from customers, a growing body of academic research supports this as well. One example comes from data catalog company data.world: in late 2023, they published a benchmark study showing that GraphRAG increased the accuracy of LLM responses by an average of 3x across 43 business questions.

More recently, and perhaps more widely known, is a series of posts from Microsoft starting in February 2024, which included a research blog post titled GraphRAG: Unlocking LLM Insights on Narrative Private Data, along with related research papers and software releases. Here, they observed two problems with baseline RAG (i.e. with vectors):

- Baseline RAG struggles to connect the dots. This happens when answering a question requires traversing different pieces of information via shared properties to provide new synthesized insights.

- Baseline RAG performs poorly when asked to comprehensively understand summary semantic concepts across large collections of data or even single large documents.

Microsoft found that “by using an LLM-generated knowledge graph, GraphRAG greatly improves the ‘retrieval’ part of RAG, filling the context window with higher relevance content, resulting in better answers and capturing provenance of evidence.” They also found that GraphRAG required 26% to 97% fewer tokens than other approaches, making it not only better at delivering answers, but cheaper and more scalable to do so.

Diving deeper on the accuracy theme, it’s not just about whether the answer is correct; the utility of the answer matters too. People are finding that with GraphRAG, answers are not only more accurate, but richer, more complete, and more useful. A recent paper published by LinkedIn describing the impact of GraphRAG on their customer service application is a great example. GraphRAG improved both the correctness and richness (and therefore utility) of answers to customer service questions, enabling their customer service teams to reduce the average resolution time per issue by 28.6%.

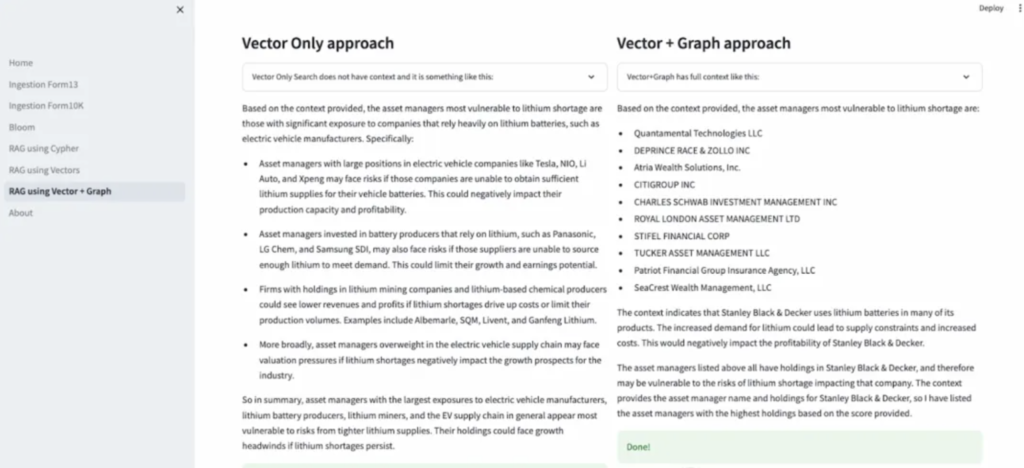

Similar examples are emerging from GenAI workshops hosted by Neo4j and partners at GCP, AWS, and Microsoft. The following example query against a collection of SEC filings illustrates well the type of answer you might get using vectors + GraphRAG vs. using vector-only RAG:

Note the difference between describing the characteristics of companies that might be impacted by a lithium shortage vs. listing the specific companies that might be impacted. If you’re an investor looking to rebalance your portfolio in the face of market changes, or a company looking to rebalance its supply chain in the face of a natural disaster, having access to the latter and not just the former could be game-changing. Here, both answers are accurate. The second is clearly more useful.

Episode 23 of Going Meta, hosted by Jesus Barrasa, provides another great example using a legal documents use case, starting from a lexical graph.

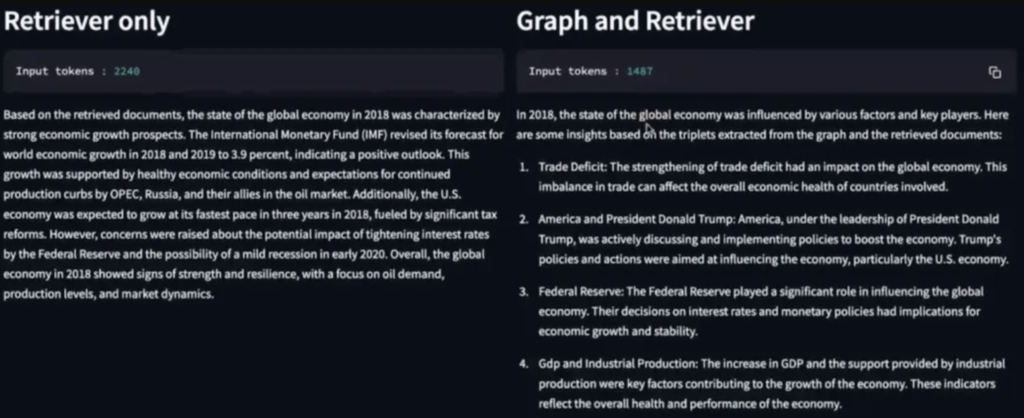

Those who follow the X-sphere and are active on LinkedIn will find new examples emerging frequently not just from the lab, but from the field. Here, Charles Borderie of Lettria gives an example contrasting a vector-only RAG with GraphRAG, comparing an LLM-based text-to-graph pipeline that extracts 10,000 financial articles into a knowledge graph:

As you can see, not only does GraphRAG dramatically improve the quality of the answer compared to plain RAG, it does so with a third fewer tokens required for the answer.

The last notable example I’ll introduce comes from Writer. They recently published a RAG benchmark report based on the RobustQA framework, comparing their GraphRAG-based approach to best-in-class competing tools. GraphRAG scored 86%, a significant improvement over competitors, which ranged from 33% to 76%, with comparable or lower latency.

Every week I meet with customers across industries who are seeing the same positive impact from GraphRAG in GenAI applications of all kinds. Knowledge graphs are making GenAI results more accurate and more useful.

#2: Improve Data Understanding, Accelerate Iteration

Knowledge graphs are intuitive, both conceptually and visually. Being able to explore them often surfaces new insights. A side benefit many users report is that once they put the effort into creating a knowledge graph, they find it helps them build and debug GenAI applications in ways they didn’t anticipate. This is partly because seeing data as a graph gives a vivid picture of the data underlying the application. The graph also gives you hooks to trace answers back to data and to follow data along causal chains.

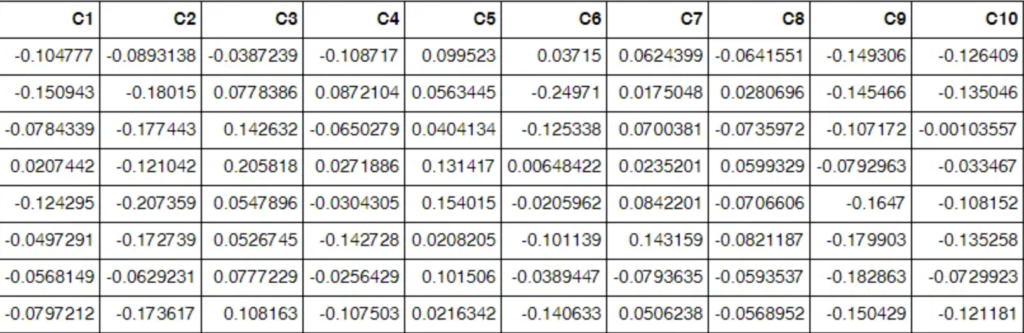

Let’s look at an example using the lithium exposure question from above. If you visualize the vectors, you’ll get something like this, just with many more rows and columns:

When you work with data in graph form, you can understand it in ways that a vector representation simply can’t enable.

Here’s an example from a recent LlamaIndex webinar showing their ability to extract a vectorized block graph (lexical graph) and LLM-extracted entities (domain graph) and relate the two via a “MENTIONS” relationship:

(You can find similar examples in LangChain, Haystack, Spring AI, etc.)
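
The idea of relating a lexical graph to a domain graph can be sketched without any framework. In this hypothetical Python example, the chunk ids, texts, and entity names are made up, and case-insensitive string matching stands in for the LLM-based entity extraction the frameworks above use. Each match becomes a `MENTIONS` edge from a chunk to an entity, which is what later lets you trace an entity back to the text it came from.

```python
# Lexical graph: hypothetical chunks of a source document.
chunks = {
    "sec-c0": "AcmeBattery sources most of its lithium from a single mine.",
    "sec-c1": "VoltCars buys battery packs from AcmeBattery.",
    "sec-c2": "VoltCars reported record deliveries.",
}
# Domain graph: entities an extraction step might produce (assumed here).
entities = ["AcmeBattery", "VoltCars", "Lithium"]

# Link the two graphs: (chunk)-[:MENTIONS]->(entity) whenever the name appears.
mentions = [
    (chunk_id, "MENTIONS", entity)
    for chunk_id, text in chunks.items()
    for entity in entities
    if entity.lower() in text.lower()
]


def sources_for(entity: str) -> list[str]:
    """Trace an entity back to every chunk that mentions it."""
    return sorted(c for c, _, e in mentions if e == entity)


def entities_in(chunk_id: str) -> list[str]:
    """List the entities a given chunk was linked to."""
    return sorted(e for c, _, e in mentions if c == chunk_id)


print(sources_for("AcmeBattery"))
print(entities_in("sec-c1"))
```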

With this graph, you might start to see how having a rich data structure will open up a wealth of new development and debugging possibilities. The individual pieces of data retain their value, while the structure itself stores and conveys additional meaning you can use to add more intelligence to your application.

This isn’t just about visualization. It’s also the effect of structuring data to convey and store meaning. Here’s the reaction of a developer at a well-known fintech company one week after introducing a knowledge graph into their RAG workflow:

This developer’s reaction aligns well with the test-driven development mindset of verifying (rather than trusting) that an answer is correct. Personally, I’m quite uncomfortable with the idea of giving SkyNet 100% autonomy to make completely opaque decisions! But more concretely, even non-AI-pessimists can appreciate the value of being able to see that blocks or documents associated with “Apple, Inc.” shouldn’t actually map to “Apple Corps”. Since data ultimately drives GenAI decision-making, having facilities to evaluate and ensure correctness is nearly essential.
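
One way to act on the “Apple, Inc.” vs. “Apple Corps” point is to write explicit checks over the graph’s entity links. The sketch below is hypothetical: the links, the indicator words, and the rule itself are assumptions for illustration, and a real pipeline would likely use richer signals. The point is that, because links are explicit data, a mistaken one can be found and fixed.

```python
import re

# Hypothetical (chunk text, linked entity) pairs produced by an extraction step.
links = [
    ("Apple reported record iPhone revenue this quarter.", "Apple Inc."),
    ("Apple unveiled a new MacBook lineup.", "Apple Corps"),  # a wrong link
    ("Apple remastered the Beatles catalogue.", "Apple Corps"),
]

# Assumed rule: words that strongly indicate one entity rather than the other.
indicator_words = {
    "Apple Inc.": {"iphone", "macbook", "ios"},
    "Apple Corps": {"beatles"},
}


def suspicious_links(pairs):
    """Flag links whose text contains words indicating a different entity."""
    flagged = []
    for text, entity in pairs:
        words = set(re.findall(r"[a-z]+", text.lower()))
        for other, indicators in indicator_words.items():
            if other != entity and words & indicators:
                flagged.append((text, entity, other))
    return flagged


for text, linked, likely in suspicious_links(links):
    print(f"{text!r} is linked to {linked} but looks like {likely}")
```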

The hypothesis-driven development approach provides a structured way to consolidate ideas and build hypotheses based on objective criteria. Rather than relying on vague assumptions, it lets developers test prototypes against user feedback before going to production. This iterative process tests the assumptions defined during the project and attempts to validate them, removing uncertainty as the project progresses.

Key steps in hypothesis-driven development include:

- Make observations

- Formulate a hypothesis

- Design an experiment to test the hypothesis

- State indicators to evaluate experiment success

- Conduct the experiment

- Evaluate results and accept or reject the hypothesis

- If necessary, make and test a new hypothesis
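
Applied to a RAG pipeline, those steps can be as simple as the sketch below. Everything in it is hypothetical: the questions, the canned answers standing in for two pipeline variants, the exact-match scoring, and the 10-point improvement threshold chosen as the success indicator. The hypothesis under test is that adding a knowledge graph improves answer accuracy.

```python
from typing import Callable

# Hypothetical labelled evaluation set: (question, expected answer).
EVAL_SET = [
    ("Which company supplies VoltCars?", "AcmeBattery"),
    ("Which raw material does AcmeBattery depend on?", "Lithium"),
    ("Which companies are exposed to a lithium shortage?", "AcmeBattery, VoltCars"),
    ("Who manages the Beatles catalogue?", "Apple Corps"),
]

# Canned answers standing in for a vector-only pipeline and a GraphRAG pipeline.
BASELINE = {
    EVAL_SET[0][0]: "AcmeBattery",
    EVAL_SET[1][0]: "Lithium",
    EVAL_SET[2][0]: "Battery makers",
    EVAL_SET[3][0]: "Apple Inc.",
}
CANDIDATE = dict(EVAL_SET)


def accuracy(answer: Callable[[str], str]) -> float:
    """Indicator: share of questions answered with an exact (case-insensitive) match."""
    hits = sum(answer(q).strip().lower() == a.lower() for q, a in EVAL_SET)
    return hits / len(EVAL_SET)


def evaluate_hypothesis(baseline, candidate, min_improvement: float = 0.10) -> dict:
    """Accept the hypothesis only if the indicator clears the agreed threshold."""
    base, cand = accuracy(baseline), accuracy(candidate)
    return {"baseline": base, "candidate": cand, "accept": cand - base >= min_improvement}


result = evaluate_hypothesis(lambda q: BASELINE.get(q, ""), lambda q: CANDIDATE.get(q, ""))
print(result)
```

Exact matching is a crude indicator, used here only to keep the sketch short. If the hypothesis were rejected, the final step in the list applies: form a new hypothesis and test it.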

By combining practices like hypothesis-driven development and continuous delivery, teams can accelerate experimentation and amplify validated learning. This allows them to innovate faster while reducing costs. The ideal is achieving one-piece flow: atomic changes that identify causal relationships between product changes and their impact on key metrics.

As the Kent Beck quote aptly states, hypothesis-driven development provides a great opportunity to test what you think the problem is before working on the solution. Introducing knowledge graphs into RAG workflows supports this by making the underlying data driving AI decisions more visible and explainable. Together, these approaches enable developers to make GenAI results more accurate, useful, and trustworthy.

The Power of GraphRAG

As we’ve seen, GraphRAG offers significant advantages over pure vector-based RAG approaches:

- Higher accuracy and more complete, useful answers by connecting the dots across pieces of information and summarizing key concepts

- Improved data understanding and faster iteration once a knowledge graph is created, making it easier to build and debug GenAI applications

- Better explainability, traceability, and access control for enhanced governance

Recent studies from companies like data.world and Microsoft have quantified the accuracy improvements, with GraphRAG increasing the accuracy of LLM responses by an average of 3x in one benchmark. LinkedIn also reported a 28.6% reduction in average customer service issue resolution time thanks to the correctness and richness of GraphRAG-powered answers.

The explainability and governance benefits are equally compelling. Knowledge graphs make the reasoning logic inside GenAI pipelines more transparent and the inputs more interpretable compared to opaque LLM decision-making. Capturing provenance and confidence information in the graph can be used for both computation and explanation. Security and privacy can also be significantly enhanced by easily restricting information access based on employee roles using graph permissions.
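
Here is a minimal sketch of how provenance, confidence, and role-based access might travel with the facts themselves. The `Fact` type, role names, source paths, and 0.5 confidence cutoff are all hypothetical; in a graph database this would typically be expressed with node and relationship properties plus the database’s own permission model, rather than a Python filter.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    text: str
    source: str                    # provenance: where the fact was extracted from
    confidence: float              # extraction confidence on an assumed 0..1 scale
    allowed_roles: frozenset[str]  # roles permitted to see this fact


FACTS = [
    Fact("AcmeBattery supplies VoltCars", "contracts/2023-17.pdf", 0.92,
         frozenset({"analyst", "legal"})),
    Fact("VoltCars is renegotiating its supply contract", "email/4411", 0.81,
         frozenset({"legal"})),
    Fact("AcmeBattery may relocate a plant", "news/0912", 0.35,
         frozenset({"analyst", "legal"})),
]


def retrieve(role: str, min_confidence: float = 0.5) -> list[Fact]:
    """Return only facts this role may see and that clear the confidence bar."""
    return [f for f in FACTS if role in f.allowed_roles and f.confidence >= min_confidence]


def explain(facts: list[Fact]) -> list[str]:
    """Attach provenance so every statement passed to the LLM can be traced."""
    return [f"{f.text} (source: {f.source}, confidence: {f.confidence:.2f})" for f in facts]


for line in explain(retrieve("analyst")):
    print(line)
```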

Building Knowledge Graphs

So what does it take to build a knowledge graph? The two most relevant types for GenAI applications are:

- Domain graphs: A graph representation of the world model relevant to your application

- Lexical graphs: A graph of the document structure, with nodes for each chunk of text

Creating lexical graphs is largely a matter of simple parsing and chunking strategies. For domain graphs, the path depends on whether you’re bringing in data from structured sources, unstructured text, or a mix. Fortunately, tools for creating knowledge graphs from unstructured data sources are rapidly improving, such as the new Neo4j Knowledge Graph Builder, which can take in PDFs, web pages, YouTube clips, or Wikipedia articles and automatically create a knowledge graph.
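
To show what “simple parsing and chunking” can mean for a lexical graph, here is a hypothetical Python sketch. It splits a document into fixed-size word chunks and records `HAS_CHUNK` edges from the document and `NEXT_CHUNK` edges between consecutive chunks. The labels, relationship names, and five-word chunk size are assumptions for illustration; real pipelines usually chunk by tokens, sentences, or document sections, often with overlap.

```python
def build_lexical_graph(doc_id: str, text: str, words_per_chunk: int = 5):
    """Split `text` into word chunks and connect them as a lexical graph."""
    words = text.split()
    nodes = {doc_id: {"label": "Document"}}
    edges = []
    previous = None
    for index, start in enumerate(range(0, len(words), words_per_chunk)):
        chunk_id = f"{doc_id}-c{index}"
        nodes[chunk_id] = {
            "label": "Chunk",
            "text": " ".join(words[start:start + words_per_chunk]),
        }
        edges.append((doc_id, "HAS_CHUNK", chunk_id))
        if previous is not None:
            edges.append((previous, "NEXT_CHUNK", chunk_id))  # preserve reading order
        previous = chunk_id
    return nodes, edges


nodes, edges = build_lexical_graph(
    "doc1", "GraphRAG adds a knowledge graph to the retrieval step of a RAG pipeline."
)
for edge in edges:
    print(edge)
```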

Data about customers, products, geographies, etc. may exist in structured form somewhere in your enterprise and can be pulled directly from where it lives, commonly in relational databases, using standard tools that follow well-established relational-to-graph mapping rules.
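
The core relational-to-graph mapping rules are easy to illustrate: each table row becomes a node, the table name becomes its label, the remaining columns become properties, and each foreign key becomes a relationship. The Python sketch below applies those rules to two hypothetical tables; the table contents and the `PLACED_BY` relationship name are assumptions for illustration.

```python
# Two hypothetical relational tables, as lists of rows.
customers = [{"id": 1, "name": "Ada"}, {"id": 2, "name": "Lin"}]
orders = [
    {"id": 10, "customer_id": 1, "product": "Battery pack"},
    {"id": 11, "customer_id": 1, "product": "Charger"},
    {"id": 12, "customer_id": 2, "product": "Battery pack"},
]


def table_to_graph(label, rows, foreign_keys):
    """Row -> node keyed by (label, primary key); foreign key -> relationship.

    `foreign_keys` maps a column name to (target label, relationship type).
    """
    nodes, rels = {}, []
    for row in rows:
        node_id = (label, row["id"])
        # Key columns are represented structurally; the rest become properties.
        nodes[node_id] = {k: v for k, v in row.items() if k != "id" and k not in foreign_keys}
        for column, (target_label, rel_type) in foreign_keys.items():
            rels.append((node_id, rel_type, (target_label, row[column])))
    return nodes, rels


customer_nodes, _ = table_to_graph("Customer", customers, {})
order_nodes, order_rels = table_to_graph(
    "Order", orders, {"customer_id": ("Customer", "PLACED_BY")}
)
nodes = {**customer_nodes, **order_nodes}

# The SQL join becomes a traversal: which products did customer 1 order?
ada_products = [nodes[src]["product"] for src, _, dst in order_rels if dst == ("Customer", 1)]
print(ada_products)
```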

Using Knowledge Graphs

Once you have a knowledge graph, a growing number of frameworks exist for GraphRAG, including the LlamaIndex Property Graph Index, LangChain’s Neo4j integration, Haystack, and others. The field is evolving rapidly, but we’re now at the stage where programmatic approaches are becoming simple.

The same is true on the graph building side, with tools like the Neo4j Importer providing a graphical UI for mapping and importing tabular data into a graph, and Neo4j’s new v1 LLM Knowledge Graph Builder mentioned above.

Another thing you’ll find yourself doing with knowledge graphs is mapping human language questions to graph database queries. A new open source tool from Neo4j called NeoConverse aims to help with natural language querying of graphs, taking a solid first step towards this goal.
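
A common way to map questions to graph queries is to give an LLM the graph schema, ask it for a Cypher query, and check the result before running it. The sketch below is hypothetical and does not reflect how NeoConverse works internally: the schema, prompt wording, and keyword-based read-only check are assumptions, and the LLM call itself is omitted, with a hard-coded string standing in for its output.

```python
import re

# Hypothetical graph schema, described in Cypher-like pattern notation.
SCHEMA = """(:Company {name})-[:SUPPLIES]->(:Company)
(:Company)-[:DEPENDS_ON]->(:Material {name})"""

# Clauses that modify data; a generated query containing any of them is rejected.
WRITE_CLAUSES = re.compile(r"\b(CREATE|MERGE|DELETE|DETACH|SET|REMOVE|DROP)\b", re.IGNORECASE)


def build_prompt(question: str, schema: str = SCHEMA) -> str:
    """Ground the LLM in the schema so it only uses labels that exist."""
    return (
        "Translate the question into a single read-only Cypher query.\n"
        "Use only these node labels, relationship types, and properties:\n"
        f"{schema}\n\n"
        f"Question: {question}\n"
        "Cypher:"
    )


def is_read_only(cypher: str) -> bool:
    """Crude guard: reject queries containing write clauses."""
    return WRITE_CLAUSES.search(cypher) is None


prompt = build_prompt("Which companies depend on lithium?")
# Stand-in for the LLM's response to `prompt`.
generated = "MATCH (c:Company)-[:DEPENDS_ON]->(:Material {name: 'Lithium'}) RETURN c.name"
print(is_read_only(generated))
```

Keyword matching is blunt (it would also reject a harmless query whose string literal contains the word “set”), so running generated queries under a read-only database role or transaction is the sturdier safeguard.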

While graphs do take some work and learning to get started with, the good news is that it’s getting easier and easier as the tools improve.

Conclusion: GraphRAG is the Next Step for RAG

The word-based computation and linguistic skills inherent in LLMs and vector-based RAG provide good results. To get consistently great results, we need to go beyond strings and capture world models in addition to word models. Just as Google found that to master search, they needed to go beyond pure text analysis and map out the underlying things behind the strings, we’re starting to see the same pattern emerge in the AI world. That pattern is GraphRAG.

Progress follows an S-curve: as one technology peaks, another drives progress further. As GenAI advances, for use cases where answer quality is mission critical; or where explainability is needed for internal, external, or regulatory stakeholders; or where fine-grained control over data access is required to protect privacy and security, there’s a good chance your next GenAI application will use a knowledge graph.

In what types of applications is GraphRAG particularly beneficial?

GraphRAG excels in domains that require complex data analysis, such as finance, healthcare, and legal sectors. It is ideal for applications like document review, sentiment analysis, and comprehensive reporting where understanding relationships between various entities is crucial.

What future developments can we expect from GraphRAG technology?

Future developments in GraphRAG technology may include advancements in knowledge graph construction, enabling more efficient and accurate data representation. Additionally, researchers are exploring multimodal capabilities to incorporate various data types, such as images and audio, which could further enhance the richness of responses.