LitServe is a serving engine for AI models, built on FastAPI. Its simple interface and flexible architecture let developers serve different models without rebuilding a server for each one. Features such as batching, streaming, and GPU autoscaling make it a strong choice for enterprises looking to optimize their AI workloads.

Key Features of LitServe

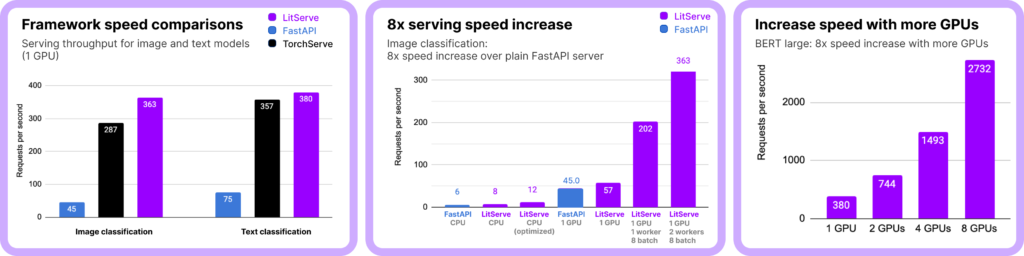

LitServe is optimized specifically for AI workloads. Its AI-specific multi-worker handling makes it at least 2x faster than plain FastAPI, and further gains come from:

- Efficient Scaling: Batching and GPU autoscaling can push performance well beyond 2x, letting LitServe handle more simultaneous requests than FastAPI or TorchServe.

- Versatility Across Tasks: The benchmark results shown come from image and text classification tasks, but LitServe's advantages extend to other machine learning workloads, including embeddings, LLM serving, audio processing, segmentation, object detection, and summarization.

The complete benchmark results are available from the LitServe repository (higher scores are better).
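LitServe exposes batching through a `max_batch_size` setting, optionally paired with `batch` and `unbatch` hooks on the API class (the exact place the setting lives may differ between versions). The sketch below does not use LitServe at all: it is a plain-Python illustration, with a hypothetical `serve_in_batches` helper and a toy model, of why batching raises throughput. Several queued requests share one model call, so per-call overhead is paid fewer times.

```python
from typing import Callable, List, Sequence


def serve_in_batches(
    requests: Sequence[float],
    predict_batch: Callable[[List[float]], List[float]],
    max_batch_size: int = 4,
) -> List[float]:
    """Run queued requests through the model in groups of max_batch_size.

    Hypothetical helper for illustration only, not LitServe's scheduler.
    A real server would typically also wait briefly for a batch to fill.
    """
    if max_batch_size < 1:
        raise ValueError("max_batch_size must be at least 1")
    outputs: List[float] = []
    for start in range(0, len(requests), max_batch_size):
        # Group up to max_batch_size pending inputs into one batch.
        batch = list(requests[start:start + max_batch_size])
        # One model call serves every request in the batch...
        batch_out = predict_batch(batch)
        # ...and the batched result is split back into per-request outputs.
        outputs.extend(batch_out)
    return outputs


if __name__ == "__main__":
    calls: List[int] = []

    def toy_model(batch: List[float]) -> List[float]:
        calls.append(len(batch))  # record the size of each model call
        return [x ** 2 for x in batch]

    results = serve_in_batches([1.0, 2.0, 3.0, 4.0, 5.0], toy_model, max_batch_size=2)
    print(results)  # [1.0, 4.0, 9.0, 16.0, 25.0]
    print(calls)    # [2, 2, 1]: three model calls instead of five
```

With five requests and a batch size of two, the toy model is called three times instead of five; on a GPU, where a batched forward pass costs little more than a single one, that difference is where the throughput gain comes from.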

Optimized for High-Performance LLM Services

For high-performance LLM serving along the lines of Ollama or vLLM, pair LitServe with LitGPT or build a custom vLLM-style server on top of LitServe. Getting the most out of an LLM requires optimizations such as KV caching, which can be implemented within LitServe's interface.
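KV caching itself is independent of LitServe. The toy sketch below (plain Python, scalar "embeddings", hypothetical function names and weights) shows what it saves: in a causal decoder, the keys and values of earlier tokens do not change when a new token arrives, so they can be stored and reused instead of recomputed at every generation step. Where such a cache would live inside a LitServe API is left as an assumption and not shown here.

```python
import math
from typing import List, Tuple

# Toy scalar "projection weights" (hypothetical); a real transformer uses
# learned matrices over vectors, but the caching logic is the same.
W_Q, W_K, W_V = 1.0, 0.5, 2.0


def attend(query: float, keys: List[float], values: List[float]) -> float:
    """Softmax attention of one query over every key/value seen so far."""
    scores = [query * k for k in keys]
    peak = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scores]
    return sum(w * v for w, v in zip(weights, values)) / sum(weights)


def decode_without_cache(tokens: List[float]) -> Tuple[List[float], int]:
    """Recompute keys and values for the whole prefix at every step."""
    outputs: List[float] = []
    projections = 0
    for t in range(len(tokens)):
        prefix = tokens[: t + 1]
        keys = [W_K * x for x in prefix]
        values = [W_V * x for x in prefix]
        projections += 2 * len(prefix)
        outputs.append(attend(W_Q * tokens[t], keys, values))
    return outputs, projections


def decode_with_cache(tokens: List[float]) -> Tuple[List[float], int]:
    """Keep a KV cache: project only the newest token at each step."""
    key_cache: List[float] = []
    value_cache: List[float] = []
    outputs: List[float] = []
    projections = 0
    for x in tokens:
        key_cache.append(W_K * x)
        value_cache.append(W_V * x)
        projections += 2
        outputs.append(attend(W_Q * x, key_cache, value_cache))
    return outputs, projections


if __name__ == "__main__":
    tokens = [0.1, 0.4, -0.3, 0.8]
    slow, slow_cost = decode_without_cache(tokens)
    fast, fast_cost = decode_with_cache(tokens)
    assert all(math.isclose(a, b) for a, b in zip(slow, fast))
    print(slow_cost, fast_cost)  # 20 8
```

Both decoders produce the same outputs, but without the cache the projection work grows quadratically with sequence length (20 projections for four tokens here), while with the cache it grows linearly (8).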

Comprehensive Features Beyond Hype

LitServe provides a straightforward and efficient deployment method, allowing users to define different servers tailored to specific AI models.
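In LitServe, a model-specific server is defined by subclassing `ls.LitAPI` and implementing hooks such as `setup`, `decode_request`, `predict`, and `encode_response`. To keep the sketch runnable without installing LitServe, the class below is a plain-Python stand-in that uses the same hook names, and `handle_request` is a hypothetical driver that calls them in the order a server would for each request.

```python
from typing import Any, Callable, Dict, Optional


class HelloWorldAPI:
    """Plain-Python stand-in for a LitServe API class.

    With LitServe installed, this would subclass ls.LitAPI instead; it is kept
    dependency-free here so the sketch runs on its own.
    """

    def __init__(self) -> None:
        self.model: Optional[Callable[[float], float]] = None

    def setup(self, device: str) -> None:
        # Load the model once per worker; a toy function stands in for weights.
        self.model = lambda x: x ** 2

    def decode_request(self, request: Dict[str, Any]) -> float:
        # Convert the JSON request body into model input.
        return request["input"]

    def predict(self, x: float) -> float:
        # Run inference on the decoded input.
        if self.model is None:
            raise RuntimeError("call setup() before predict()")
        return self.model(x)

    def encode_response(self, output: float) -> Dict[str, float]:
        # Convert the model output into a JSON-serializable response.
        return {"output": output}


def handle_request(api: HelloWorldAPI, request: Dict[str, Any]) -> Dict[str, float]:
    """Hypothetical driver: call the hooks in the order a server would."""
    x = api.decode_request(request)
    y = api.predict(x)
    return api.encode_response(y)


if __name__ == "__main__":
    api = HelloWorldAPI()
    api.setup(device="cpu")  # a server calls setup once at startup
    print(handle_request(api, {"input": 4.0}))  # {'output': 16.0}
```

With LitServe installed, the same class would subclass `ls.LitAPI` (after `import litserve as ls`) and be served with something like `ls.LitServer(HelloWorldAPI(), accelerator="auto").run(port=8000)`; check the LitServe documentation for the exact options in your version. Swapping in a different model only means changing these four hooks, which is what lets each server be tailored to its model.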

Simple Installation: Installing the LitServe package is a breeze, making it accessible for developers at all levels.

The recommended way to install LitServe for 99% of users is to use pip:

```bash
pip install litserve
```

LitServe does not yet have an official conda distribution, but you can still install it with pip inside a conda environment:

```bash
conda activate your-env
pip install litserve
```

To install the latest LitServe from the main branch:

```bash
pip install git+https://github.com/Lightning-AI/litserve.git@main
```

For local development, or to change the internals and submit a pull request 😉:

```bash
git clone https://github.com/Lightning-AI/LitServe
cd LitServe
pip install -e '.[all]'
```

Diverse Deployment Examples: From large-scale models to voice, video, and image processing, LitServe supports a wide array of deployment scenarios.

Featured examples

- Toy model: Hello world

- LLMs: Llama 3 (8B), LLM proxy server, agent with tool use

- NLP: Hugging Face, BERT, text embedding API

- Multimodal: OpenAI CLIP, MiniCPM, Phi-3.5 Vision Instruct

- Audio: Whisper, AudioCraft, StableAudio, noise cancellation (DeepFilterNet)

- Vision: Stable Diffusion 2, AuraFlow, Flux, image super-resolution (Aura SR)

- Speech: text-to-speech (XTTS V2)

- Classical ML: random forest, XGBoost

- Miscellaneous: media conversion API (ffmpeg)

Comparative Analysis: LitServe can be self-managed on your own infrastructure or run fully managed on Lightning Studios; the table below compares the two options so you can choose the one that fits your needs.

| Feature | Self Managed | Fully Managed on Studios |
|---|---|---|
| Deployment | ✅ Do-it-yourself deployment | ✅ One-button cloud deploy |
| Load balancing | ❌ | ✅ |
| Autoscaling | ❌ | ✅ |
| Scale to zero | ❌ | ✅ |
| Multi-machine inference | ❌ | ✅ |
| Authentication | ❌ | ✅ |
| Own VPC | ❌ | ✅ |
| AWS, GCP | ❌ | ✅ |
| Use your own cloud commits | ❌ | ✅ |
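To make the "Autoscaling" and "Scale to zero" rows concrete: an autoscaler repeatedly chooses a replica count from the current load, and scale-to-zero means that count may drop to zero while the service is idle. The sketch below is a hypothetical queue-length rule in plain Python (the function name and default thresholds are illustrative assumptions); it is not how Lightning Studios or LitServe implement autoscaling.

```python
import math


def desired_replicas(
    pending_requests: int,
    target_per_replica: int = 8,
    min_replicas: int = 0,
    max_replicas: int = 4,
) -> int:
    """Hypothetical queue-based autoscaling rule with scale-to-zero.

    Illustrative only; managed platforms use their own policies and signals.
    """
    if target_per_replica < 1:
        raise ValueError("target_per_replica must be at least 1")
    # Enough replicas so that each handles at most target_per_replica requests.
    needed = math.ceil(pending_requests / target_per_replica)
    # Clamp to the allowed range; min_replicas=0 lets an idle service scale to zero.
    return max(min_replicas, min(max_replicas, needed))


if __name__ == "__main__":
    for queue in (0, 5, 17, 100):
        print(queue, desired_replicas(queue))
```

An empty queue yields zero replicas, moderate load scales up proportionally, and `max_replicas` caps spending under a traffic spike; setting `min_replicas=1` trades idle cost for avoiding cold starts.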

Official Resources and Community Support

Official Space: Lightning

The Lightning space hosts many useful resources, including popular blog posts on deploying a Phi-3.5 Vision API with LitServe and on building image generation APIs with Flux.

LitServe is capable of deploying models across multiple modalities, from audio to images to video, ensuring versatility in applications.

Featured Applications

- Image Processing: LitServe handles image tasks well, with examples built on Flux, super-resolution models, and ComfyUI.

- Multimodal Deployments: Users can deploy complex multimodal models such as Phi-3.5 Vision and Phi-3-vision-128k-instruct.

Best Practices and Insights

Explore a series of insightful blogs that detail the end-to-end process from data handling to service deployment. Each blog entry corresponds to a best practice, providing actionable insights for users.

🌟 Conclusion: I hope this article proves helpful! Thank you for reading! If you appreciate this series, please show your support by liking, sharing, or following, which will help me assess future content directions.

Reference Links:

GitHub: LitServe Repository