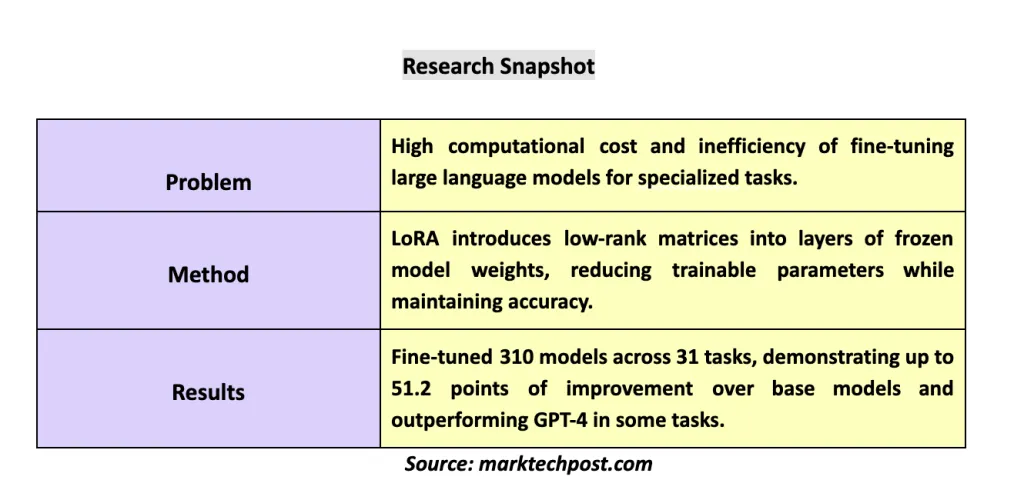

Researchers at Predibase have published a large-scale study of Low-Rank Adaptation (LoRA) as a method for fine-tuning large language models (LLMs). Across 310 models fine-tuned on 31 tasks, they report that LoRA improves both efficiency and task performance, with some fine-tuned models surpassing GPT-4 on the tasks they were tuned for.

The Significance of Fine-Tuning LLMs

LLMs are now core components in a wide range of NLP applications. Fine-tuning them for a specific task is costly, however: updating every weight of a multi-billion-parameter model demands substantial compute and memory. Parameter-efficient methods such as LoRA aim to deliver task-specific gains without that cost.

LoRA: Parameter-Efficient Fine-Tuning

LoRA is a parameter-efficient fine-tuning (PEFT) method that freezes the pretrained model weights and adds small, trainable low-rank matrices alongside existing layers. Because only these matrices are trained, the number of trainable parameters and the memory needed for training drop sharply, while the specialized model can still reach accuracy comparable to full fine-tuning.

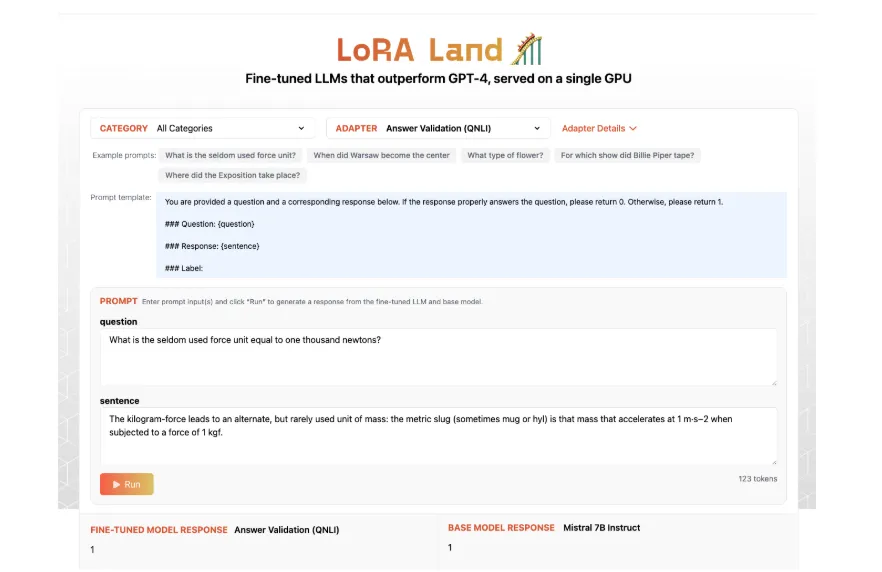
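The low-rank update described above can be illustrated with a minimal sketch. It follows the standard LoRA formulation (a frozen weight matrix W plus a scaled product of two small matrices B and A, with B initialized to zero), not code from the Predibase report. The dimensions, the rank `r`, the scaling factor `alpha`, and the helper names are illustrative assumptions.

```python
import random

def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def lora_forward(W, A, B, x, alpha):
    """Return W x + (alpha / r) * B (A x); only A and B would be trained."""
    r = len(A)                        # rank = number of rows in A
    base = matvec(W, x)               # frozen pretrained path
    update = matvec(B, matvec(A, x))  # trainable low-rank path
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]

def trainable_params(d_out, d_in, r):
    """Parameters in A (r x d_in) plus B (d_out x r)."""
    return r * (d_in + d_out)

# Toy sizes, chosen only for illustration.
d_in, d_out, r, alpha = 8, 6, 2, 4
rng = random.Random(0)
W = [[rng.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]
A = [[rng.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]
B = [[0.0] * r for _ in range(d_out)]  # zero init: the adapter starts as a no-op
x = [rng.gauss(0, 1) for _ in range(d_in)]

y = lora_forward(W, A, B, x, alpha)

# For a hypothetical 4096 x 4096 layer at rank 8:
print(trainable_params(4096, 4096, 8))  # 65536 trainable parameters
print(4096 * 4096)                      # 16777216 parameters in the frozen matrix
```

In this hypothetical layer the adapter trains about 0.4% as many parameters as the frozen matrix holds, which is where the memory savings during training come from.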

LoRA Land: A Comprehensive Evaluation

The Predibase research team introduced LoRA Land, a project that evaluates LoRA fine-tuning of LLMs across a broad set of tasks. They fine-tuned each of 10 base models on 31 tasks, yielding 310 models that cover classical NLP, coding, knowledge-based reasoning, and math-based problems. The work is supported by LoRAX, an open-source inference server designed to serve multiple LoRA fine-tuned LLMs.

Experimental Results and Findings

The research team applied LoRA to base models quantized to 4 bits. On average, the fine-tuned models outperformed their base models by 34 points and GPT-4 by 10 points. The researchers standardized their testing framework so that all models were evaluated on equal terms.
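To give a feel for what storing base weights in 4 bits involves, here is a toy sketch. This article does not describe the report's actual quantization scheme, so the code uses simple symmetric absmax rounding as a stand-in. The function names, the sample weights, and the 7-billion-parameter model size are all assumptions made for illustration.

```python
def quantize_4bit(weights):
    """Map floats to signed integer codes in [-7, 7] using one shared scale."""
    largest = max(abs(w) for w in weights)
    scale = largest / 7 if largest > 0 else 1.0
    codes = [max(-7, min(7, round(w / scale))) for w in weights]
    return codes, scale

def dequantize(codes, scale):
    """Recover approximate floats from the integer codes."""
    return [c * scale for c in codes]

weights = [0.42, -0.17, 0.05, -0.63, 0.31, 0.0]  # made-up sample values
codes, scale = quantize_4bit(weights)
restored = dequantize(codes, scale)

# Rounding to the nearest code keeps each error within half a quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(codes, max_error <= scale / 2 + 1e-12)

# Rough weight storage for a hypothetical 7-billion-parameter model
# (ignores the small overhead of storing the scales).
n_params = 7_000_000_000
gb_16bit = n_params * 2 / 1e9    # 2 bytes per weight
gb_4bit = n_params * 0.5 / 1e9   # half a byte per weight
print(gb_16bit, gb_4bit)
```

In a setup like the one described, the frozen base weights would sit in the compact 4-bit form while the small LoRA matrices are trained at higher precision.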

Deployment and Scalability

LoRAX, the inference server used in the study, served many fine-tuned models concurrently while keeping latency low. Features such as dynamic adapter loading and hierarchical weight caching let it sustain high concurrency, which shows that multiple fine-tuned models can be deployed efficiently on shared infrastructure.
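The idea behind dynamic adapter loading can be sketched as a small cache that loads an adapter the first time a request names it and evicts the least recently used one when space runs out. This is a simplified, single-tier illustration, not LoRAX's implementation. The `AdapterCache` class, the `fake_load` function, the adapter names, and the least-recently-used eviction policy are all assumptions.

```python
from collections import OrderedDict

class AdapterCache:
    """Keeps at most `capacity` adapters resident; evicts the least recently used."""

    def __init__(self, capacity, load_fn):
        self.capacity = capacity
        self.load_fn = load_fn          # called only on a cache miss
        self._resident = OrderedDict()  # adapter_id -> adapter weights
        self.loads = 0                  # number of cache misses so far

    def get(self, adapter_id):
        if adapter_id in self._resident:
            self._resident.move_to_end(adapter_id)  # mark as recently used
            return self._resident[adapter_id]
        adapter = self.load_fn(adapter_id)          # load on demand
        self.loads += 1
        self._resident[adapter_id] = adapter
        if len(self._resident) > self.capacity:
            self._resident.popitem(last=False)      # evict the oldest entry
        return adapter

def fake_load(adapter_id):
    """Stand-in for reading adapter weights from slower storage."""
    return {"id": adapter_id}

cache = AdapterCache(capacity=2, load_fn=fake_load)
for requested in ["sentiment", "sql", "sentiment", "summarize", "sql"]:
    cache.get(requested)

print(cache.loads)            # 4 loads for 5 requests
print(list(cache._resident))  # ['summarize', 'sql']
```

All requests share one base model, so only the small adapter weights move in and out of memory. A hierarchical cache would add further tiers, for example demoting an evicted adapter to slower memory instead of discarding it.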

Implications for the Future of AI

The LoRA Land project shows that LoRA is an effective way to fine-tune large language models for a variety of specialized tasks. The findings point to LoRA’s efficiency and scalability, and to its capacity to match or exceed GPT-4 performance in certain domains. The project also makes the case for specialized LLMs and for LoRAX as a practical way to serve them.

Conclusion

Predibase's study shows that LoRA fine-tuning can deliver large performance gains over base models and, on specific tasks, outperform GPT-4. These findings are likely to inform how language models are fine-tuned and deployed across industries.

Paper link: https://arxiv.org/abs/2405.00732