Open WebUI is a user-friendly, feature-rich, self-hosted web user interface (UI) designed to operate entirely offline. It supports several large language model (LLM) runners, including Ollama and OpenAI-compatible APIs, which makes it a versatile front end for working with AI models.

Key Features

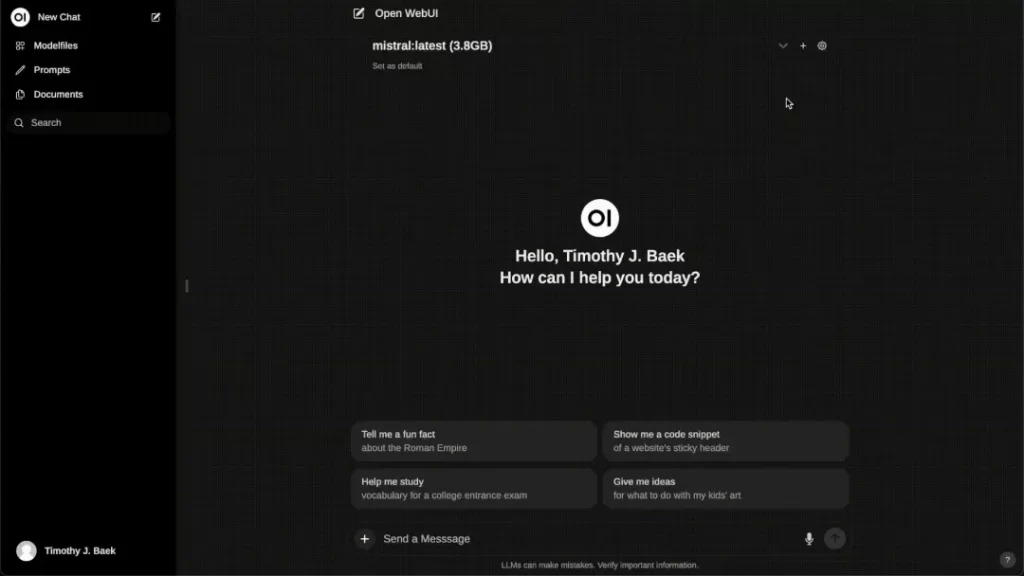

Intuitive User Experience

Open WebUI offers a chat interface inspired by ChatGPT that works well on both desktop and mobile devices. Its responsive design adapts to different screen sizes, and the interface stays fast during interactions.

Effortless Setup and Customization

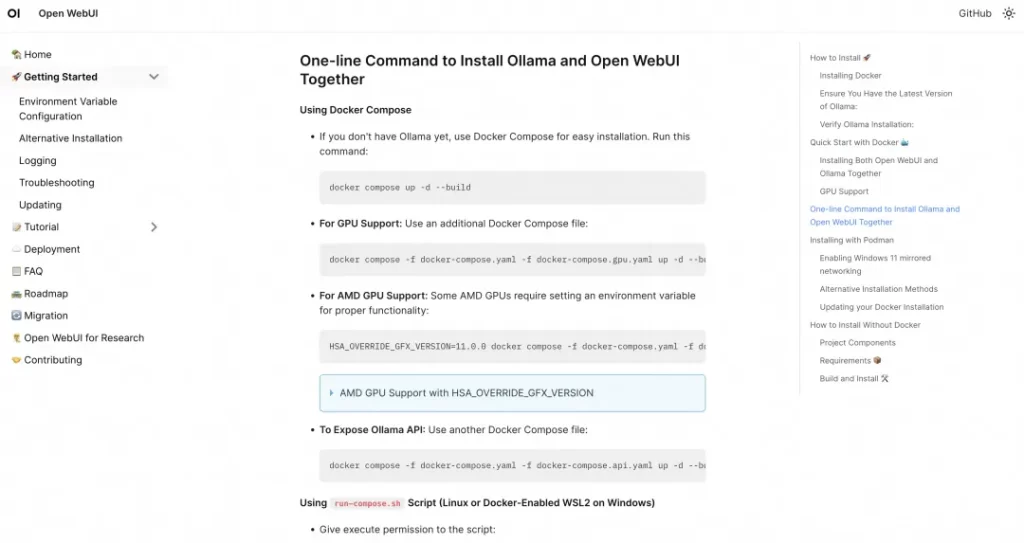

Setting up Open WebUI is straightforward: it can be deployed with Docker or on Kubernetes (using kubectl, Kustomize, or Helm). Users can personalize the interface with a choice of themes, and code in responses is rendered with syntax highlighting for readability.

Advanced Interaction Capabilities

Open WebUI offers comprehensive support for Markdown and LaTeX, enabling rich interactions. The local Retrieval Augmented Generation (RAG) integration allows users to load documents directly into chats or add files to the document library. Users can also change the RAG embedding model in the document settings to enhance document processing.
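To make the retrieval step concrete, here is a deliberately simplified sketch of the general RAG pattern: score document chunks against a question and prepend the best matches to the prompt. It is not Open WebUI's implementation. A bag-of-words count stands in for the neural embedding model you would select in the document settings, and `embed`, `retrieve`, and `build_prompt` are hypothetical helper names.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the question, best first."""
    q = embed(question)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(question: str, chunks: list[str], k: int = 2) -> str:
    """Prepend the retrieved chunks to the question as context for the model."""
    context = "\n".join(f"- {c}" for c in retrieve(question, chunks, k))
    return f"Answer using this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    docs = [
        "Open WebUI stores its data in /app/backend/data inside the container.",
        "Watchtower can update running containers.",
        "LLaVA is a multi-modal model.",
    ]
    print(build_prompt("Where does Open WebUI store its data?", docs, k=1))
```

Swapping `embed` for a real embedding model changes which chunks score highest, which is why the embedding model chosen in the settings affects retrieval quality.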

Web Browsing and Preset Prompts

Typing “#” followed by a URL brings that website's content into the chat so the model can work with it. Preset prompts can be inserted into the chat input with the “/” command, which speeds up recurring tasks.
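Conceptually, the “#” command has to fetch the page, reduce it to readable text, and hand that text to the model as context. The sketch below illustrates those steps with Python's standard library only; it is an assumption about the general approach rather than Open WebUI's actual code, and `fetch_html`, `html_to_text`, and `prompt_with_page` are hypothetical names.

```python
from html.parser import HTMLParser
from urllib.request import urlopen


class TextExtractor(HTMLParser):
    """Collect visible text, skipping the contents of <script> and <style>."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download a page and decode it as UTF-8, replacing undecodable bytes."""
    with urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


def html_to_text(html: str) -> str:
    """Reduce HTML to a single line of visible text."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


def prompt_with_page(question: str, html: str, max_chars: int = 4000) -> str:
    """Prepend the page text, truncated to max_chars, to the user's question."""
    page_text = html_to_text(html)[:max_chars]
    return f"Web page content:\n{page_text}\n\nQuestion: {question}"
```

For example, `prompt_with_page("Summarize this page.", fetch_html("https://example.com"))` builds the combined prompt. Truncating to `max_chars` is a crude way to keep long pages within the model's context window.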

RLHF Annotation and Conversation Tagging

Open WebUI supports Reinforcement Learning from Human Feedback (RLHF) by allowing users to rate messages with thumbs up or down and provide textual feedback. Conversation tagging makes it easy to categorize and locate specific chats for quick reference and data collection.
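Thumbs-up and thumbs-down ratings become useful for RLHF once they are turned into comparisons, commonly (prompt, chosen, rejected) triples of the kind reward models are trained on. The sketch assumes a hypothetical `RatedResponse` record; Open WebUI's own feedback export format may differ, so adapt the field names to whatever your export contains.

```python
from dataclasses import dataclass
from itertools import product


@dataclass
class RatedResponse:
    """Hypothetical feedback record: one model answer and its thumbs rating."""
    prompt: str
    response: str
    thumbs_up: bool


def preference_pairs(records: list[RatedResponse]) -> list[tuple[str, str, str]]:
    """Pair each liked answer with each disliked answer to the same prompt."""
    grouped: dict[str, tuple[list[str], list[str]]] = {}
    for r in records:
        liked, disliked = grouped.setdefault(r.prompt, ([], []))
        (liked if r.thumbs_up else disliked).append(r.response)
    # Prompts with only liked (or only disliked) answers yield no pairs.
    return [
        (prompt, chosen, rejected)
        for prompt, (liked, disliked) in grouped.items()
        for chosen, rejected in product(liked, disliked)
    ]
```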

Model Management

Open WebUI simplifies model management with features like downloading or removing models directly from the web UI and updating all locally installed models with a single button click. Users can easily create Ollama models by uploading GGUF files through the web UI.
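Updating all local models amounts to re-pulling each installed model from the registry. The sketch below shows that idea against a local Ollama server, assuming the default address http://localhost:11434 and the `ollama` CLI on the same machine. It lists models through Ollama's `GET /api/tags` endpoint; it is an illustration, not the code behind Open WebUI's button, and `installed_model_names` and `update_all_models` are hypothetical helpers.

```python
import json
import subprocess
from urllib.request import urlopen

OLLAMA_URL = "http://localhost:11434"  # assumption: default local Ollama address


def installed_model_names(tags_response: dict) -> list[str]:
    """Extract model names from the JSON returned by Ollama's GET /api/tags."""
    return [m["name"] for m in tags_response.get("models", [])]


def update_all_models() -> None:
    """Re-pull every installed model; `ollama pull` only downloads what changed."""
    with urlopen(f"{OLLAMA_URL}/api/tags", timeout=10) as resp:
        names = installed_model_names(json.load(resp))
    for name in names:
        # The ollama CLI talks to the local server, matching OLLAMA_URL above.
        subprocess.run(["ollama", "pull", name], check=True)
```

Call `update_all_models()` on the machine running Ollama; because of `check=True`, it raises `subprocess.CalledProcessError` if any pull fails.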

Multi-Model and Multi-Modal Support

Open WebUI makes it easy to switch between chat models and supports multi-modal interactions with models such as LLaVA, which accept images as well as text. The model file builder lets users create Ollama Modelfiles directly in the web UI.
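An Ollama Modelfile is a short text file: `FROM` names a base model or a local GGUF file, `PARAMETER` lines set options, and `SYSTEM` sets a system prompt. The web UI builder fills in this kind of file for you; the hypothetical `build_modelfile` function below writes a minimal one by hand so the structure is visible. The model name `llama3` and the parameter values are examples only.

```python
from typing import Optional


def build_modelfile(base: str, system: Optional[str] = None,
                    parameters: Optional[dict] = None) -> str:
    """Assemble a minimal Ollama Modelfile.

    base can be an existing model name (e.g. "llama3") or a path to a
    GGUF file (e.g. "./my-model.gguf").
    """
    lines = [f"FROM {base}"]
    for key, value in (parameters or {}).items():
        lines.append(f"PARAMETER {key} {value}")
    if system:
        # Triple quotes let the system prompt span several lines.
        lines.append(f'SYSTEM """{system}"""')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_modelfile("llama3", system="You are a concise assistant.",
                          parameters={"temperature": 0.7, "num_ctx": 4096}))
```

Save the output as `Modelfile` and register it with `ollama create my-assistant -f Modelfile` (`my-assistant` is an arbitrary name). Using a path such as `./my-model.gguf` as the base is how a downloaded GGUF file becomes an Ollama model.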

Collaborative and Local Chat Features

Users can query multiple models at the same time and compare their responses to draw on each model's strengths. The “@” command lets you address a specific model by name within a conversation. Open WebUI also lets users generate chat links and share conversations with other users.
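Querying several models at once is essentially a fan-out: the same prompt goes to each model concurrently and the answers are collected side by side. The sketch below shows that pattern with a thread pool; `ask_all` is a hypothetical helper, and `ask` stands in for whatever client call you use to reach a model.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def ask_all(prompt: str, models: list[str],
            ask: Callable[[str, str], str]) -> dict[str, str]:
    """Send one prompt to several models in parallel; return answers keyed by model."""
    with ThreadPoolExecutor(max_workers=len(models) or 1) as pool:
        futures = {m: pool.submit(ask, m, prompt) for m in models}
        return {m: f.result() for m, f in futures.items()}


if __name__ == "__main__":
    def fake_ask(model: str, prompt: str) -> str:
        # Placeholder for a real HTTP call to Ollama or an OpenAI-compatible API.
        return f"{model} answers: {prompt}"

    print(ask_all("Hello", ["llama3", "mistral"], fake_ask))
```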

Chat History and Management

Open WebUI provides easy access to conversation history, including the ability to review and explore the entire regeneration history. Completed conversations can be archived for future reference, and chat data can be seamlessly imported and exported.

Voice Input and Advanced Features

In addition to text-based interactions, Open WebUI supports voice input, allowing users to engage with models through speech. The platform also offers advanced features such as configurable text-to-speech endpoints, fine-grained control over advanced parameters, image generation integration, OpenAI API integration, multi-user management, webhook integration, model whitelisting, role-based access control (RBAC), and backend reverse proxy support.
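Fine-grained parameter control and OpenAI API integration both come down to the request body sent to an OpenAI-compatible chat completions endpoint. The sketch below builds such a body with explicit sampling parameters and posts it; `chat_payload` and `send` are hypothetical helpers, and the default values are illustrative rather than Open WebUI's defaults.

```python
import json
from urllib.request import Request, urlopen


def chat_payload(model: str, prompt: str, temperature: float = 0.7,
                 top_p: float = 0.9, max_tokens: int = 512) -> dict:
    """Build an OpenAI-style chat completion request body with explicit sampling parameters."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,  # higher values give more varied output
        "top_p": top_p,              # nucleus sampling cutoff
        "max_tokens": max_tokens,    # upper bound on generated tokens
    }


def send(base_url: str, api_key: str, payload: dict, timeout: float = 60.0) -> dict:
    """POST the payload to {base_url}/chat/completions and return the decoded JSON reply."""
    req = Request(
        f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )
    with urlopen(req, timeout=timeout) as resp:
        return json.load(resp)
```

For OpenAI itself, `base_url` would be https://api.openai.com/v1. The Open WebUI documentation also describes an OpenAI-style endpoint authenticated with an API key from its settings; the base URL http://localhost:3000/api is an assumption here, so confirm the path in the API section of the documentation for your version.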

Usage Instructions

Important Notes

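When installing Open WebUI with Docker, always include -v open-webui:/app/backend/data in your docker run command. This mounts a named Docker volume (open-webui) at /app/backend/data, where Open WebUI keeps its database, so your data persists when the container is stopped, removed, or replaced with a newer image.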
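If you script your deployment, building the command in one place makes it hard to forget the volume. The sketch below reproduces the two quick-start `docker run` commands from the Docker Deployment section as Python argument lists; `build_run_command` is a hypothetical helper, and the image tag, port, and container name are the ones used in this article.

```python
from typing import Optional

IMAGE = "ghcr.io/open-webui/open-webui:main"
# Named volume "open-webui" mounted at Open WebUI's data directory.
DATA_VOLUME = "open-webui:/app/backend/data"


def build_run_command(ollama_base_url: Optional[str] = None, host_port: int = 3000) -> list[str]:
    """Return the quick-start `docker run` arguments, always including the data volume."""
    cmd = ["docker", "run", "-d", "-p", f"{host_port}:8080"]
    if ollama_base_url:
        # Ollama runs on another server: tell Open WebUI where to find it.
        cmd += ["-e", f"OLLAMA_BASE_URL={ollama_base_url}"]
    else:
        # Ollama runs on this machine: make host.docker.internal resolve to the host.
        cmd.append("--add-host=host.docker.internal:host-gateway")
    cmd += ["-v", DATA_VOLUME, "--name", "open-webui", "--restart", "always", IMAGE]
    return cmd
```

Passing the list to `subprocess.run(build_run_command(), check=True)` starts the container; passing a URL such as https://example.com produces the remote-Ollama variant.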

Docker Deployment

Quick Start:

- If Ollama is installed on your local computer, use the following command to start the container:

```bash
docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main
```

- If Ollama is on a different server, set the OLLAMA_BASE_URL environment variable to that server's URL:

```bash
docker run -d -p 3000:8080 -e OLLAMA_BASE_URL=https://example.com -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main
```

- Accessing Open WebUI: After installation, open http://localhost:3000 in your browser.
- Resolving Connection Issues: Connection problems often mean the container cannot reach Ollama, because inside a container 127.0.0.1 refers to the container itself rather than your machine. Starting the container with the --network=host flag lets it use the host machine's network directly. In that case, set OLLAMA_BASE_URL=http://127.0.0.1:11434, and note that the -p port mapping no longer applies, so Open WebUI is served at http://localhost:8080 instead.
- Keeping Docker Installation Updated: You can use Watchtower to pull the latest image and recreate the open-webui container:

```bash
docker run --rm --volume /var/run/docker.sock:/var/run/docker.sock containrrr/watchtower --run-once open-webui
```

Other Installation Methods

In addition to Docker, Open WebUI can be installed without Docker (for example, with Python's pip), with Docker Compose, or on Kubernetes with Kustomize or Helm. For detailed installation steps, refer to the official Open WebUI documentation (https://docs.openwebui.com/getting-started/) or ask in the project's Discord community.
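Whichever installation method you choose, Open WebUI must be able to reach Ollama at OLLAMA_BASE_URL. A quick way to narrow down connection problems is to check Ollama directly, assuming its default port 11434. The `ollama_reachable` helper below is hypothetical; it relies on Ollama's root endpoint answering with the text “Ollama is running”.

```python
import http.client
from urllib.request import urlopen


def ollama_reachable(base_url: str = "http://localhost:11434", timeout: float = 3.0) -> bool:
    """Return True if an Ollama server answers at base_url.

    Ollama's root endpoint replies with the plain text "Ollama is running".
    """
    try:
        with urlopen(base_url.rstrip("/") + "/", timeout=timeout) as resp:
            return b"Ollama is running" in resp.read()
    except (OSError, ValueError, http.client.HTTPException):
        # Connection refused, DNS failure, timeout, invalid URL, or malformed reply.
        return False
```

If the check succeeds on the host but Open WebUI in Docker still cannot connect, the problem lies in container networking, which is the situation the --network=host option in the Docker Deployment section addresses.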

Conclusion

Open WebUI is a powerful, user-friendly, and feature-rich platform that enables offline interactions with large language models. Its extensive features, customization options, and support for various models make it an ideal choice for users seeking a versatile and intuitive AI interaction experience.

Note: The content in this article is for reference only. For the latest project features, please refer to the official GitHub page.

What is the purpose of Open WebUI?

Open WebUI is designed to provide a user-friendly interface for interacting with large language models (LLMs). It allows users, regardless of technical expertise, to easily engage with various LLMs through a simple web interface. This accessibility opens up opportunities for broader use cases in education, research, and content creation.

How can I install Open WebUI on my system?

Open WebUI can be installed in several ways, including with Docker or with Python's pip package manager (pip install open-webui, then open-webui serve to start the server). Docker is the recommended approach, and the official documentation covers both methods in detail so you can choose the one that best fits your setup.

What features does Open WebUI offer for LLM interaction?

Open WebUI provides numerous features, including customizable chat interfaces, prompt presets for quick interactions, and advanced error handling tools. These functionalities enhance the user experience, making it easier to utilize LLMs for various applications such as text generation and data analysis.

Is Open WebUI suitable for beginners?

Absolutely! Open WebUI is specifically designed to cater to users of all skill levels. Its intuitive interface allows beginners to start using LLMs without needing programming knowledge, making it an excellent choice for anyone looking to explore AI technologies.

How does Open WebUI ensure data privacy and security?

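Open WebUI is self-hosted, so chats and other data are stored on the machine that runs it (for Docker installs, in the open-webui volume) rather than on a third-party service. When paired with local models, such as those served by Ollama, your conversations never need to leave your own infrastructure. If you connect external providers, such as an OpenAI API endpoint, the prompts you send to those models are processed by that provider. The project treats privacy as a core design goal.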