A Groundbreaking Approach to 2D Image Generation

Image Neural Field Diffusion Models, a new 2D image generation method, was presented at CVPR 2024. The approach trains diffusion models on image neural fields to generate realistic images with plausible details, without the excessive “beautification” (over-sharpening, over-saturation, or over-stylization) seen in other methods. The results closely resemble images straight out of a camera.

Advantages of Image Neural Fields

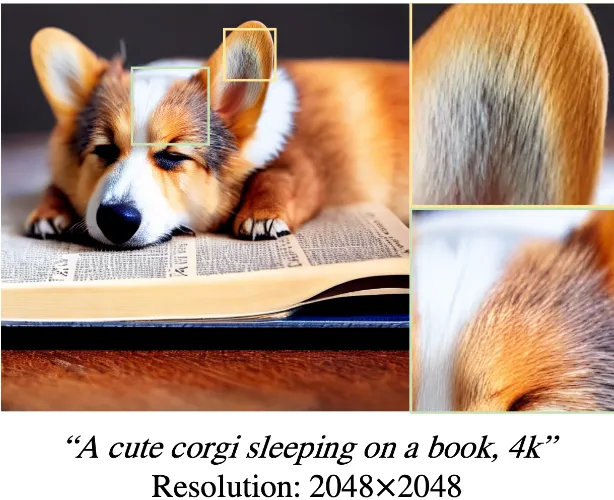

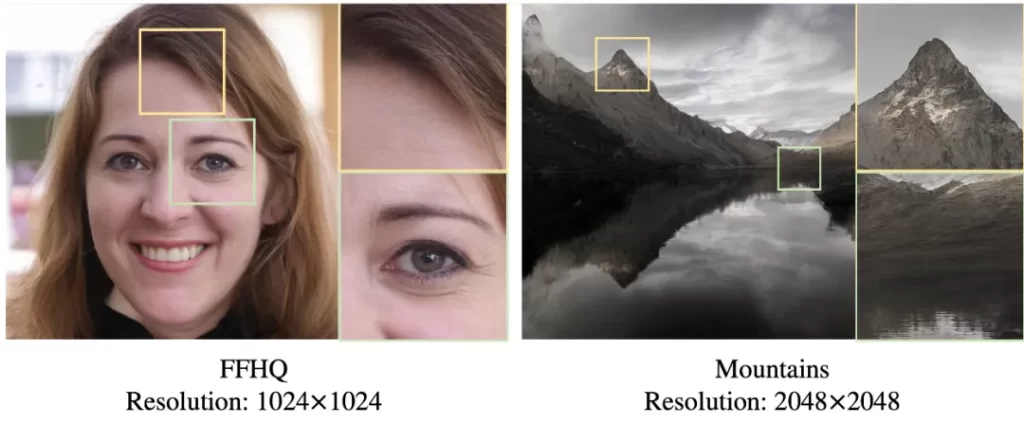

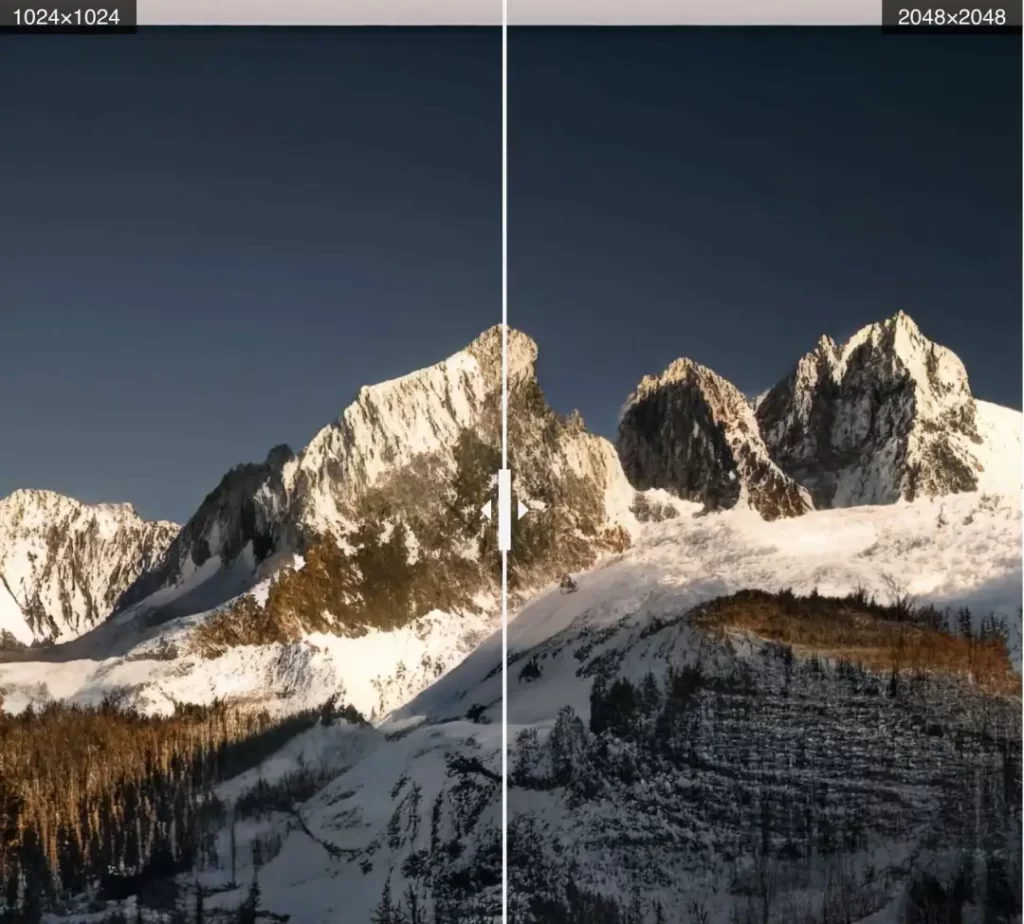

Training on image neural fields enables the model to generate images at any resolution, a significant advantage over fixed-resolution models. The examples above show generated image neural fields rendered at 2K resolution, from single-domain models (left and center) and general text-to-image models (right).

Diffusion remains efficient because it runs on a latent representation of only 64×64 resolution.

Methodology: Continuous Image Distribution Learning

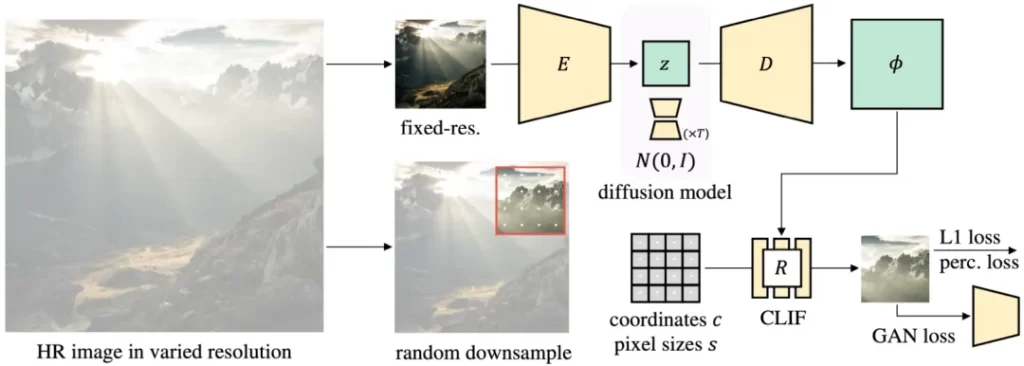

The key challenge is obtaining a latent space that represents photo-realistic image neural fields. The researchers propose a simple yet effective method inspired by recent techniques, with critical changes to make the image neural fields achieve photographic realism.

Their approach converts existing latent diffusion autoencoders into image neural field autoencoders. The resulting image neural field diffusion models can be trained on mixed-resolution image datasets, and they outperform fixed-resolution diffusion models followed by super-resolution models. They can also solve inverse problems effectively by applying conditions at different scales.

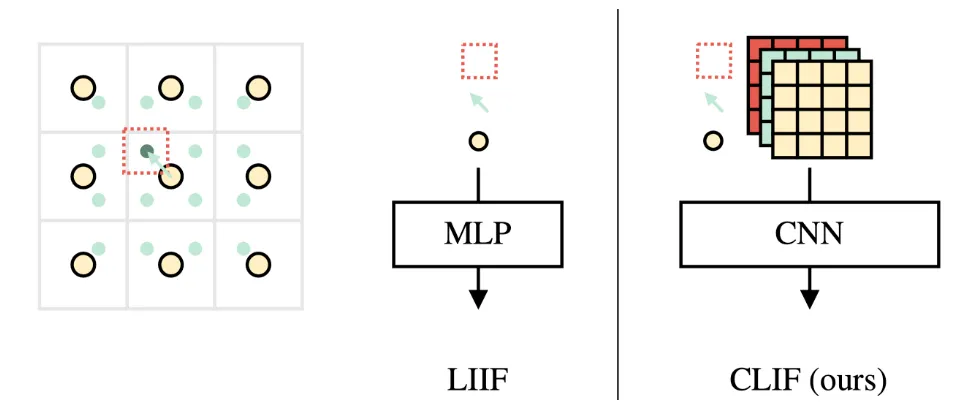

CLIF: Convolutional Local Image Function

Given a feature map, for each query point, the nearest feature vectors are retrieved along with relative coordinates and pixel size. The query information grid is then passed to a convolutional network that renders an RGB grid. Unlike per-point functions like LIIF, CLIF has greater generative power while still learning scale consistency.
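The query information described above can be sketched in a few lines of plain Python. This is only an illustration, not the authors' implementation: the function name `build_query_grid`, the dictionary keys, the use of a single nearest feature cell, and the choice to measure coordinates in feature-cell units are all assumptions made for this example. The convolutional renderer that turns the grid into RGB is not shown.

```python
def build_query_grid(feat_h, feat_w, out_h, out_w, region=(0.0, 0.0, 1.0, 1.0)):
    """Build per-pixel query info for rendering `region` at out_h x out_w.

    `region` is (y0, x0, y1, x1) in normalized [0, 1] image coordinates.
    Each grid entry holds the index of the nearest feature cell, the query's
    offset from that cell's center, and the output pixel size, with the
    offset and pixel size both measured in feature-map cells.
    (Hypothetical helper; names and units are assumptions.)
    """
    y0, x0, y1, x1 = region
    # Size of one output pixel, in feature-map cells.
    pix_h = (y1 - y0) / out_h * feat_h
    pix_w = (x1 - x0) / out_w * feat_w
    grid = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Center of output pixel (i, j), in feature-map cell units.
            cy = (y0 + (i + 0.5) * (y1 - y0) / out_h) * feat_h
            cx = (x0 + (j + 0.5) * (x1 - x0) / out_w) * feat_w
            # Feature cell k spans [k, k + 1), so its center is k + 0.5.
            fy = min(int(cy), feat_h - 1)
            fx = min(int(cx), feat_w - 1)
            row.append({
                "feat_index": (fy, fx),
                "rel_coord": (cy - (fy + 0.5), cx - (fx + 0.5)),
                "pixel_size": (pix_h, pix_w),
            })
        grid.append(row)
    return grid


# Render the whole image at 4x4 from a 2x2 feature map.
grid = build_query_grid(2, 2, 4, 4)
print(grid[0][0])
```

Zooming into a smaller `region` at the same output size shrinks `pixel_size`, which is the signal a renderer could use to stay consistent across scales.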

Experimental Results

High-Resolution Generation with Compact Latent Models

The model generates 64×64 latent image neural fields with diffusion and renders them at 2048×2048 output resolution (with 256×256 patches).
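To make the numbers concrete, the sketch below enumerates the patches needed to cover a 2048×2048 output with 256×256 patches. It assumes the patches tile the output without overlap, which the text does not state explicitly; the helper name `patch_regions` is hypothetical.

```python
def patch_regions(out_size=2048, patch_size=256):
    """Return normalized (y0, x0, y1, x1) regions that tile a square output.

    Assumes non-overlapping patches (an assumption made for this example).
    """
    if out_size % patch_size != 0:
        raise ValueError("patch_size must divide out_size")
    n = out_size // patch_size  # patches per side
    return [
        (r / n, c / n, (r + 1) / n, (c + 1) / n)
        for r in range(n)
        for c in range(n)
    ]


regions = patch_regions()
print(len(regions))        # number of patches to render
print(2048 // 64)          # upsampling factor from latent to output
print(64 // (2048 // 256)) # latent cells per patch side
```

Under these assumptions, the output is covered by 64 patches, each corresponding to an 8×8 block of the 64×64 latent, with an overall 32× upsampling factor per side.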

Scale Consistency of CLIF

Text-to-Image Generation

CLIF rendering is used to fine-tune Stable Diffusion for 2048×2048 resolution outputs.

Arbitrary Scale High-Resolution Image Inversion

The model can solve for high-resolution images satisfying multi-scale conditions, defined as square regions and text prompts. The corresponding regions are rendered at 224×224 and passed to a pre-trained CLIP model running at a fixed resolution. Similarity to the text prompts is maximized, enabling layout-to-image generation without extra task-specific training.

Notably, without image neural fields, a fixed-resolution diffusion model would need to decode the entire region at full resolution and then downsample it to 224×224 for CLIP, which incurs heavy computation and memory costs.
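A rough pixel count illustrates the gap. The sketch below compares decoding a region at full resolution against rendering it directly at CLIP's 224×224 input size. It counts output pixels only and ignores network-specific costs, so it is a back-of-the-envelope estimate; the function name `full_decode_overhead` is hypothetical.

```python
def full_decode_overhead(region_side, clip_side=224):
    """Ratio of pixels decoded at full resolution to pixels rendered for CLIP.

    `region_side` is the side length, in output pixels, of the square
    region being constrained.
    """
    return (region_side * region_side) / (clip_side * clip_side)


# A condition that covers the whole 2048x2048 image.
print(round(full_decode_overhead(2048), 1))
```

For a region spanning the full 2048×2048 image, full-resolution decoding touches about 83.6 times as many pixels as a direct 224×224 rendering.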

Conclusion

Image Neural Field Diffusion Models, i.e., diffusion models on resolution-independent latent spaces, demonstrate significant advantages over fixed-resolution models. The proposed framework is simple yet effective, and it can convert the autoencoders of existing latent diffusion models into image neural field autoencoders.

This approach can build diffusion models from mixed-resolution datasets, achieve high-resolution image synthesis without extra super-resolution models, and solve inverse problems with conditions applied at different scales of the same image. Image neural fields can be rendered via patches as needed to efficiently compute constraint losses and solve for very high-resolution images.

The resolution-independent image priors learned by the diffusion models open up exciting possibilities for AI image generation that rivals the quality of real photography.

Paper address: https://arxiv.org/pdf/2406.07480

Project homepage: https://yinboc.github.io/infd/