In the rapidly evolving field of natural language processing (NLP), large language models (LLMs) have delivered strong results across a wide range of tasks. However, their enormous computational and memory requirements during inference have made these models expensive to deploy. As we move into 2024, the need for more efficient and sustainable LLMs has become increasingly pressing.

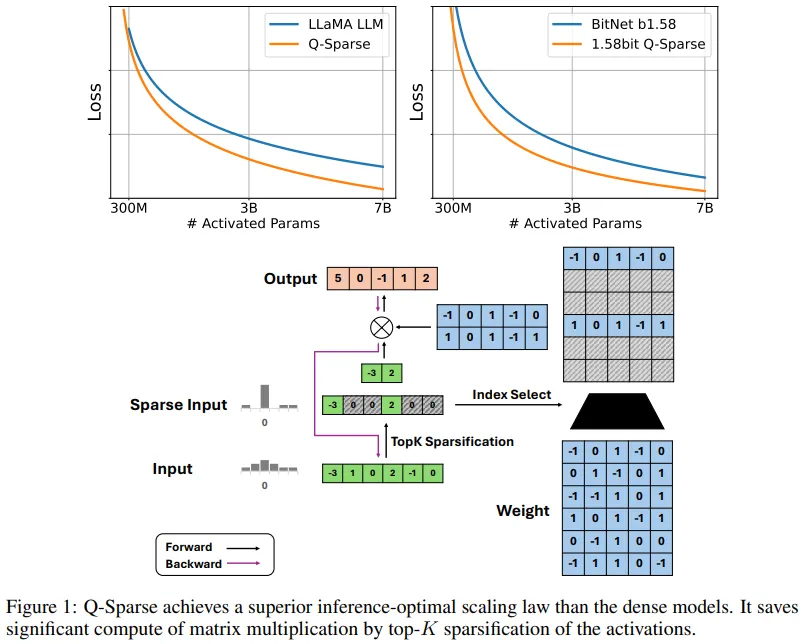

Enter Q-Sparse, a method developed by researchers from Microsoft and the University of Chinese Academy of Sciences. By achieving full activation sparsity through top-K sparsification and training with a straight-through estimator, Q-Sparse improves the inference efficiency of LLMs while keeping performance on par with dense baseline models.

The Efficiency Bottleneck: Challenges in Deploying LLMs

The exceptional performance of LLMs comes at a cost: heavy computational and memory demands during inference. As Aidan Gomez, co-founder of Cohere, points out, “The computational resources required to train and run these models are immense, limiting their accessibility and scalability.”

Recent research efforts have focused on various techniques to improve LLM efficiency, including quantization, pruning, distillation, and improved decoding. Among these, sparsity has emerged as a key approach, reducing computational costs by skipping zero elements and decreasing I/O transfers between memory and compute units.

However, traditional weight sparsity is difficult to parallelize efficiently on GPUs and often causes accuracy loss. Activation sparsity, such as that provided by the Mixture-of-Experts (MoE) mechanism, also needs further study: its scaling laws relative to dense models are not yet well understood.

Q-Sparse: Pioneering Full Activation Sparsity

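Q-Sparse tackles these challenges by achieving full sparsity of activations through top-K sparsification combined with a straight-through estimator (STE). Before each matrix multiplication in the linear projections, a top-K function keeps only the K activation entries with the largest magnitudes and sets the rest to zero. Because the zeroed entries contribute nothing to the product, the corresponding columns of the weight matrix need not be read or multiplied, which reduces both compute and memory traffic.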
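
The forward pass described above can be sketched in plain Python for a single token. This is a minimal illustration, not the authors' implementation: it assumes selection by absolute value, a dense weight matrix stored as nested lists, and hypothetical helper names (`topk_mask`, `sparse_linear`). It counts multiply-accumulates to show that only the K kept input channels ever touch the weight matrix.

```python
from typing import List, Tuple

Matrix = List[List[float]]  # weight matrix W, shape (d_out, d_in)


def topk_mask(x: List[float], k: int) -> List[bool]:
    """True for the k entries of x with the largest absolute value."""
    order = sorted(range(len(x)), key=lambda i: abs(x[i]), reverse=True)
    keep = set(order[:k])
    return [i in keep for i in range(len(x))]


def sparse_linear(x: List[float], w: Matrix, k: int) -> Tuple[List[float], int]:
    """y = (x * mask) @ W^T, reading only the columns of W for kept channels.

    Returns the output vector and the number of multiply-accumulates (MACs).
    """
    mask = topk_mask(x, k)
    kept = [i for i, keep in enumerate(mask) if keep]  # zeroed channels are skipped
    y: List[float] = []
    macs = 0
    for row in w:
        acc = 0.0
        for i in kept:
            acc += x[i] * row[i]
            macs += 1
        y.append(acc)
    return y, macs


x = [0.1, -2.0, 0.3, 1.5, -0.05, 0.8]
W = [
    [1.0, 1.0, 1.0, 1.0, 1.0, 1.0],
    [1.0, 0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 1.0, 0.0, 1.0],
]
y, macs = sparse_linear(x, W, k=3)
print(macs)  # 9, versus 3 * 6 = 18 for the dense product
```

With K = 3 of 6 channels kept, the layer performs half the multiply-accumulates and reads only half of W's columns. Real kernels get their savings from skipping those reads on the GPU; nested loops like these only make the bookkeeping visible.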

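Q-Sparse works with both full-precision and quantized models, including 1-bit LLMs such as BitNet b1.58, whose weights are ternary (-1, 0, +1; about 1.58 bits per weight). In the feed-forward layers, Q-Sparse uses squared ReLU, which outputs exactly zero for every negative input and therefore further increases activation sparsity. During training, the top-K step passes no gradient to the entries it drops, which can cause vanishing gradients; the STE avoids this by treating the top-K function as the identity in the backward pass, so every activation still receives a gradient.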
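
The squared ReLU and the STE can both be illustrated on one linear layer. The sketch below is hypothetical (`squared_relu`, `topk_mask`, and `grad_input` are illustrative names, and the upstream gradient `grad_y` is assumed given). It contrasts the exact gradient of the masked product, which is zero on dropped entries, with the STE gradient, which treats top-K as the identity in the backward pass.

```python
from typing import List

Matrix = List[List[float]]  # weight matrix W, shape (d_out, d_in)


def squared_relu(x: List[float]) -> List[float]:
    """max(x, 0)**2: every negative input maps to exactly zero."""
    return [max(v, 0.0) ** 2 for v in x]


def topk_mask(x: List[float], k: int) -> List[float]:
    """1.0 for the k largest-|x| entries, 0.0 elsewhere."""
    order = sorted(range(len(x)), key=lambda i: abs(x[i]), reverse=True)
    keep = set(order[:k])
    return [1.0 if i in keep else 0.0 for i in range(len(x))]


def grad_input(grad_y: List[float], w: Matrix, mask: List[float], use_ste: bool) -> List[float]:
    """Gradient of the loss w.r.t. x for the layer y = (x * mask) @ W^T.

    Exact rule: (grad_y @ W) * mask, so dropped entries get zero gradient.
    STE rule: pretend the top-K step is the identity, giving grad_y @ W.
    """
    d_in = len(w[0])
    g = [sum(grad_y[o] * w[o][i] for o in range(len(w))) for i in range(d_in)]
    if use_ste:
        return g
    return [gi * mi for gi, mi in zip(g, mask)]


h = squared_relu([-1.0, 0.5, 2.0, -0.3])  # [0.0, 0.25, 4.0, 0.0]
x = [0.2, -1.0, 3.0, 0.5]
mask = topk_mask(x, k=2)                   # keeps -1.0 and 3.0
W = [[1.0, 2.0, 3.0, 4.0]]
print(grad_input([1.0], W, mask, use_ste=False))  # [0.0, 2.0, 3.0, 0.0]
print(grad_input([1.0], W, mask, use_ste=True))   # [1.0, 2.0, 3.0, 4.0]
```

In autograd frameworks the same STE trick is commonly written in PyTorch-style code as `x + (sparse_x - x).detach()`: the forward value equals the sparse tensor, while the gradient flows to `x` unchanged.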

The effectiveness of Q-Sparse has been demonstrated across various settings, including training from scratch, continued training, and fine-tuning. As Sharan Narang, a researcher at Google Brain, notes, “Q-Sparse’s ability to maintain efficiency and performance across diverse training scenarios makes it a versatile and promising approach for LLM optimization.”

Unlocking the Potential of Sparse Activation LLMs

Recent studies have shown that LLM performance follows a power-law relationship with model size and training data. The Q-Sparse researchers find that sparsely-activated LLMs follow a similar scaling law: performance follows a power law in model size and an exponential law in the sparsity ratio.
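
One parameterization that matches this description uses the loss L, total parameters N, sparsity ratio S, and positive fitted constants E, B, C, α, β. Treat the exact form below as an assumption to check against the paper rather than as the authors' fitted law:

$$
L(N, S) = E + A(S)\,N^{-\alpha}, \qquad A(S) = B + C\exp\!\left(\frac{\beta}{1 - S}\right)
$$

Subtracting the dense case (S = 0) at the same model size isolates the sparse-dense gap:

$$
L(N, S) - L(N, 0) = \bigl(A(S) - A(0)\bigr)\,N^{-\alpha}
$$

With α > 0 this gap shrinks as N grows, and with β > 0 it widens as S increases, which is the qualitative behavior the experiments report.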

Experiments show that, at a fixed sparsity ratio, sparsely-activated models scale with model size much as dense models do. Moreover, the performance gap between sparse and dense models of the same size shrinks as model size increases. This suggests that, with an appropriate sparsity ratio, sparse models can match dense models, and can even outperform them when both are given the same inference compute budget.

According to the inference-optimal scaling law, which compares models under a fixed inference compute budget (a fixed number of activated parameters), the optimal sparsity ratio is 45.58% for full-precision models and 61.25% for 1.58-bit models. These values give researchers and practitioners a concrete target when designing and deploying efficient sparse LLMs.
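
Turning these optimal ratios into model sizes takes one line of arithmetic. Assuming inference cost scales with the number of activated parameters, N_active = (1 - S) * N (a simplification that ignores embeddings and any other unsparsified parts), a fixed budget N_active allows a total model size of N_active / (1 - S). The helper name below is hypothetical.

```python
def total_params_for_budget(active_params: float, sparsity: float) -> float:
    """Total parameters N whose active fraction (1 - sparsity) equals active_params.

    Assumes inference cost is proportional to activated parameters:
    N_active = (1 - sparsity) * N.
    """
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    return active_params / (1.0 - sparsity)


for label, s in [("full precision", 0.4558), ("1.58-bit", 0.6125)]:
    ratio = total_params_for_budget(1.0, s)
    print(f"{label}: N = {ratio:.2f} x N_active")
# full precision: N = 1.84 x N_active
# 1.58-bit: N = 2.58 x N_active
```

Under this assumption, the full-precision optimum is a model with about 1.84 times as many total parameters as it activates per token, and the 1.58-bit optimum about 2.58 times.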

Putting Q-Sparse to the Test

To validate the effectiveness of Q-Sparse, researchers conducted extensive evaluations across various settings. When training from scratch using 50B tokens, Q-Sparse matched the performance of the dense baseline model at a 40% sparsity level. This achievement highlights the potential for significant computational savings without sacrificing model performance.

For quantized models, BitNet b1.58 with Q-Sparse outperformed the dense baseline under the same compute budget, suggesting that Q-Sparse combines well with low-bit quantization to push LLM efficiency further.
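
To make the combination concrete, the sketch below pairs BitNet b1.58's absmean ternary weight quantization (weights scaled by their mean absolute value, rounded, and clipped to {-1, 0, +1}) with top-K activations. Assumptions: a per-tensor scale, full-precision activations (BitNet b1.58's 8-bit activation quantization is omitted), and sparsification applied before the ternary matmul; how Q-Sparse orders these steps is not described in this article. Function names are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def absmean_ternary(w: Matrix, eps: float = 1e-5) -> Tuple[List[List[int]], float]:
    """Ternary weights in {-1, 0, +1} with a per-tensor absmean scale gamma."""
    n = sum(len(row) for row in w)
    gamma = sum(abs(v) for row in w for v in row) / n
    # Scale by gamma, round (Python's round() is round-half-to-even), clip to [-1, 1].
    q = [[max(-1, min(1, round(v / (gamma + eps)))) for v in row] for row in w]
    return q, gamma


def sparse_ternary_linear(x: List[float], q: List[List[int]], gamma: float, k: int) -> List[float]:
    """Top-K activations times ternary weights: only adds and subtracts, then one rescale."""
    order = sorted(range(len(x)), key=lambda i: abs(x[i]), reverse=True)
    kept = order[:k]  # channels outside the top-K are never visited
    y = []
    for row in q:
        acc = 0.0
        for i in kept:
            if row[i] == 1:
                acc += x[i]
            elif row[i] == -1:
                acc -= x[i]
            # row[i] == 0: nothing to do
        y.append(acc * gamma)  # undo the weight scaling
    return y


W = [[0.9, -0.1, -1.2, 0.4], [0.05, 0.6, -0.7, 1.1]]
q, gamma = absmean_ternary(W)
print(q)  # [[1, 0, -1, 1], [0, 1, -1, 1]]
y = sparse_ternary_linear([0.5, -2.0, 1.0, 0.1], q, gamma, k=2)
```

With ternary weights, each kept activation is only added, subtracted, or skipped, so the two techniques save work in different places: top-K reduces how many weight columns are touched, and ternary weights remove the multiplications within them.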

Continued training of Mistral 7B with Q-Sparse achieved performance comparable to the dense baseline while activating fewer parameters, and therefore running more efficiently. The fine-tuning results were similarly strong: a Q-Sparse model with approximately 4B activated parameters performed on par with or better than a dense 7B model.

As Paulius Micikevicius, a principal engineer at NVIDIA, emphasizes, “Q-Sparse’s strong performance across various training scenarios showcases its potential to revolutionize the deployment of LLMs in real-world applications.”

The Future of Efficient LLMs: Q-Sparse and Beyond

The results obtained by combining BitNet b1.58 with Q-Sparse underscore the immense potential for boosting LLM efficiency, particularly in inference. As we look to the future, researchers plan to scale up training with larger model sizes and more tokens, pushing the boundaries of what is possible with sparse activation LLMs.

Integration with techniques like YOCO, which optimizes KV cache management, promises to further enhance the efficiency gains achieved by Q-Sparse. Q-Sparse is also complementary to other approaches such as Mixture-of-Experts (MoE), and the researchers plan to adapt it to batched inference to make it more practical in real-world deployments.
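
One reason batch adaptation matters can be shown with a small count. Assuming each sequence in a batch selects its own top-K channels, a batched matrix multiplication must read the union of the selected weight columns, which can approach the full matrix as the batch grows. The article does not say how Q-Sparse will be adapted; this hypothetical sketch only illustrates the problem.

```python
from typing import List, Set


def topk_indices(x: List[float], k: int) -> Set[int]:
    """Indices of the k entries of x with the largest absolute value."""
    order = sorted(range(len(x)), key=lambda i: abs(x[i]), reverse=True)
    return set(order[:k])


def columns_needed(batch: List[List[float]], k: int) -> int:
    """Distinct weight columns a batched matmul must read: the union over rows."""
    needed: Set[int] = set()
    for x in batch:
        needed |= topk_indices(x, k)
    return len(needed)


batch = [
    [3.0, 2.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 3.0, 2.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0, 3.0, 2.0],
]
print(columns_needed(batch[:1], k=2))  # 2 of 6 columns for one sequence
print(columns_needed(batch, k=2))      # 6 of 6 columns for the whole batch
```

Each sequence alone is 67% sparse, yet the batch as a whole needs every column, so per-token top-K savings do not automatically carry over to batched serving.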

As the NLP community continues to grapple with the challenges of deploying large language models, Q-Sparse offers a compelling solution. With its ability to achieve full activation sparsity, improve inference efficiency, and maintain performance across various model architectures and training scenarios, Q-Sparse is poised to become a key enabler of efficient and sustainable LLMs in 2024 and beyond.

For more details on this groundbreaking research, read the full paper: Q-Sparse: Boosting Efficiency of Large Language Models Through Full Activation Sparsity