RAG (Retrieval-Augmented Generation) has seemingly become the industry standard for addressing hallucination and knowledge gaps in large language models (LLMs), with the aim of improving model accuracy and knowledge breadth. But the reality may be far less simple, especially for RAG developers: don’t take RAG at face value.

Recently, a research team from Stanford University set out to quantify the “tug-of-war” between an LLM’s internal prior knowledge and the information it retrieves. Their findings reveal some little-known patterns in LLM behavior.

Research Methodology

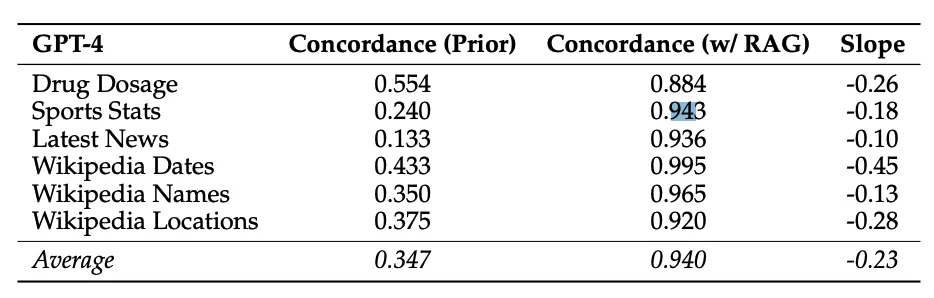

To explore this question, the researchers systematically evaluated the question-answering behavior of top LLMs such as GPT-4 across six datasets from different domains. They tested not only the model’s responses without any retrieved content, but also its responses when the retrieved content was perturbed to varying degrees, in order to examine how robust the model’s behavior is.

The research covered 266 drug dosage questions, 192 sports statistics questions, 249 news questions, and 600 Wikipedia questions about dates, names, and cities. Together these form a broad, difficult, and representative set of evaluation tasks.

For each question, the researchers created 10 modified versions of the retrieved content, each with a different degree of modification. For questions requiring numerical answers, they multiplied the correct answer in the reference document by factors like 0.1, 0.2, 0.4, etc., to create erroneous information with varying degrees of deviation from the true value. For questions with categorical answers, such as names and cities, they prompted GPT-4 itself to generate different levels of erroneous information such as “slight modifications”, “major modifications”, and “absurd modifications”.
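To make the numerical perturbation concrete, here is a minimal sketch of how such modified documents could be generated. The function name `perturb_numeric_answer` and the simple string replacement are assumptions made for illustration, not the researchers’ actual code. Only the three factors named above are included, so pass the full list yourself if you know it.

```python
from typing import Dict, Sequence

# Only these three factors are named in the article; the rest of the list
# is not given there, so it is deliberately left out of this sketch.
NAMED_FACTORS = (0.1, 0.2, 0.4)


def perturb_numeric_answer(
    document: str,
    correct_answer: float,
    factors: Sequence[float] = NAMED_FACTORS,
) -> Dict[float, str]:
    """Return one modified copy of `document` per factor (hypothetical helper).

    Each copy replaces the correct value with `correct_answer * factor`.
    Plain substring replacement is used, so a value such as 500 would also
    match inside 1500; a real pipeline would need stricter matching.
    """
    original = f"{correct_answer:g}"
    if original not in document:
        raise ValueError("correct answer not found in document")

    variants: Dict[float, str] = {}
    for factor in factors:
        wrong_value = f"{correct_answer * factor:g}"
        variants[factor] = document.replace(original, wrong_value)
    return variants


doc = "The recommended dose is 500 mg per day."
for factor, text in perturb_numeric_answer(doc, 500.0).items():
    print(factor, "->", text)
```

Each variant can then be passed to the model as the “retrieved” context to see whether the answer follows the document or the model’s prior.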

Finding 1: LLMs Prefer to Trust Themselves

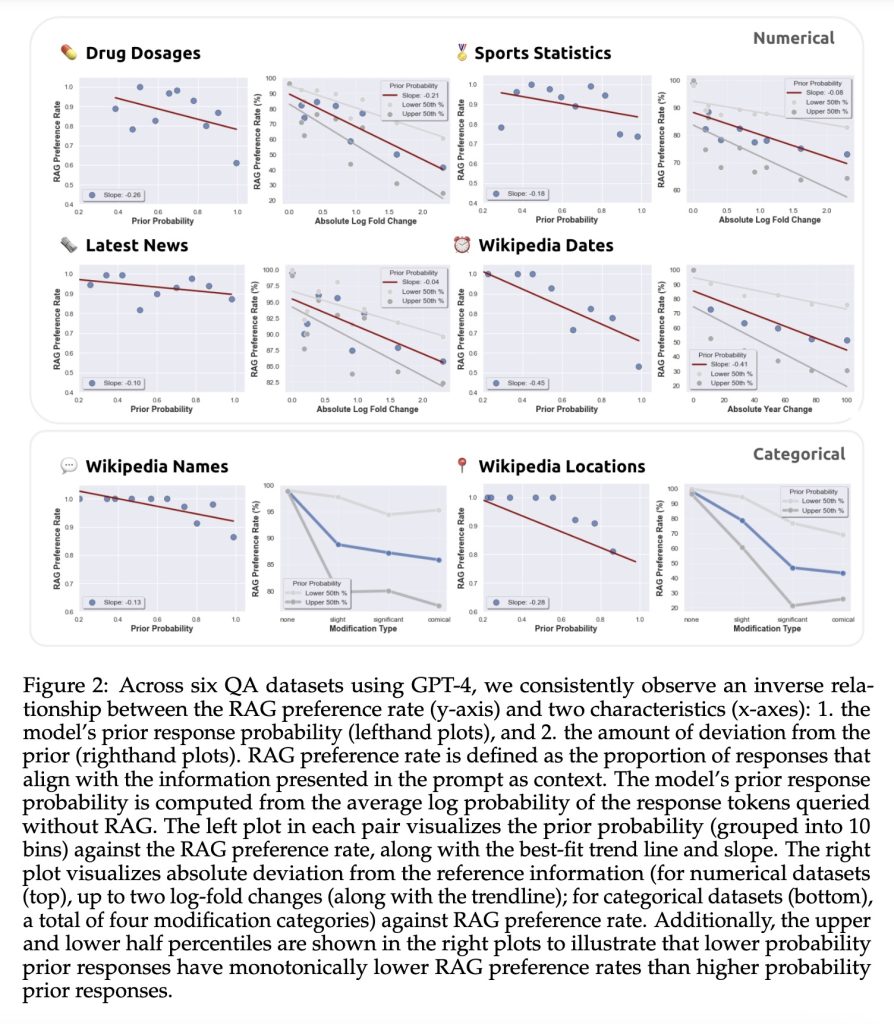

First, the research found that when LLMs are highly confident in their initial responses (i.e., high prior probability), they are more likely to ignore the retrieved erroneous information and stick to their own opinions. Conversely, if the model lacks full confidence in its initial answer, it is more easily misled by incorrect retrieved content.

Source: Wu et al. (2024)

This finding was consistently confirmed across all six datasets. Taking the “drug dosage” dataset as an example, the research showed that for every 10% increase in the model’s prior probability, the likelihood of it adopting the retrieved content decreases by 2.6%. On the “Wikipedia dates” dataset, this prior dominance effect was even more pronounced, with a decrease of 4.5%.
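One way to arrive at a number of this kind is to fit a straight line to (prior probability, adoption rate) pairs and read off the slope. The sketch below does this with ordinary least squares. The data points are made up for illustration and are not results from the study, and reading the reported figures as percentage points is an assumption.

```python
from typing import Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equally long sequences with at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Made-up illustration data (NOT from the study):
# x = model's prior probability, y = fraction of answers that follow the context.
prior_probs = [0.1, 0.3, 0.5, 0.7, 0.9]
adoption_rates = [0.90, 0.85, 0.80, 0.75, 0.70]

slope, intercept = fit_line(prior_probs, adoption_rates)

# A slope of s on a 0-to-1 scale means a change of s * 0.1 * 100
# percentage points for every 10-point increase in prior probability.
change_per_10_points = slope * 0.1 * 100
print(f"{change_per_10_points:.1f} percentage points per 10-point increase in prior")
```

Under this reading, a slope of about -0.26 corresponds to the 2.6% figure quoted for the drug dosage dataset.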

Finding 2: LLMs Struggle to Accept Increasingly “Absurd” Information

In addition to quantifying the influence of prior probabilities, the researchers discovered another interesting phenomenon: the more “absurd” the retrieved content becomes relative to the LLM’s prior, the less likely the model is to buy it.

For example, in the “news” dataset, when the values in the reference document were adjusted only slightly, by around 20%, the LLM had a higher probability of adopting this “slight deviation”. But once the modified value reached 3 times the original or more, entering the “obviously absurd” zone, the LLM was very likely to say no to it.

In the “names” and “cities” datasets, the researchers went further and fabricated some “absurd to the point of being ridiculous” information, such as changing “Bob Green” to “Blob Lawnface” and “Miami” to “Miameme”. Faced with such preposterous statements, the LLM exhibited reassuring “rationality” and correctly refused to accept them in most cases.

Finding 3: Prompt Strategies Influence LLMs’ Trade-off Between Prior Knowledge and RAG Retrieval

Another important finding is that the prompting strategy significantly affects how LLMs trade off prior knowledge against retrieved information.

They tested three typical prompting styles: “standard”, “strict”, and “loose”. Among them, the “strict” prompt emphasizes that the LLM must fully trust the retrieved content and not use prior knowledge. The “loose” prompt encourages the LLM to exercise its own judgment and take an open but prudent attitude towards the retrieved content. The “standard” prompt seeks to strike a balance between the two. (You can also use these three prompts as a reference when testing during RAG development.)

The experimental results show that the “strict” prompt can indeed significantly increase the LLM’s preference for retrieved content, making it exhibit a high degree of “compliance”. However, an overly “loose” attitude may cause the LLM to rely more on its own priors while ignoring the value of retrieved information.
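If you want to try the three styles in your own RAG tests, a small helper like the one below is enough to switch between them. The template wording here is hypothetical: it paraphrases the descriptions above and is not the exact prompt text used by the researchers. The names `PROMPT_TEMPLATES` and `build_prompt` are likewise made up for this sketch.

```python
# Hypothetical prompt wording that paraphrases the three styles described above.
PROMPT_TEMPLATES = {
    "strict": (
        "Answer the question using only the context below. "
        "Do not use any prior knowledge.\n\n"
        "Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    ),
    "standard": (
        "Use the context below to help answer the question.\n\n"
        "Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    ),
    "loose": (
        "The context below may or may not be accurate. Use your own judgment "
        "and rely on it only if it seems reasonable.\n\n"
        "Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    ),
}


def build_prompt(style: str, question: str, context: str) -> str:
    """Fill the template for the chosen style ('strict', 'standard' or 'loose')."""
    try:
        template = PROMPT_TEMPLATES[style]
    except KeyError:
        raise ValueError(f"unknown prompt style: {style!r}") from None
    return template.format(question=question, context=context)


print(build_prompt(
    "strict",
    question="What is the recommended daily dose?",
    context="The recommended dose is 500 mg per day.",
))
```

Running the same question and the same perturbed context through all three styles makes it easy to see how much each wording shifts the model toward or away from the retrieved content.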

Implications and Recommendations

This research reveals some subtle characteristics of LLMs in RAG systems. Rather than being passively constrained by retrieved content, LLMs weigh their own priors against the external information when producing an answer.

The experimental data indicate that when the model already has strong prior knowledge about a question, it is still very likely to stick to its own judgment even when given more external information. At the same time, when the retrieved content deviates too far from the prior and becomes absurd, the model tends to fall back on its own answer. The strength of this tug-of-war differs from domain to domain.

As prompt engineers, it is crucial for us to recognize these patterns in order to optimize how we build and apply RAG systems. We need to tailor the approach to the situation:

- In areas where the model has a high degree of mastery, give it more autonomy so that it can draw on its own knowledge.

- In areas where the model has a lower degree of mastery, provide high-quality external information as a supplement and guide to extend what it can answer.
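The two recommendations above can be sketched as a simple routing rule: estimate how confident the model is in its answer without any context, then pick a prompt style accordingly. Everything in this sketch is an assumption made for illustration: the function names, the use of the geometric mean of token probabilities as the confidence measure, and the two thresholds, which would need tuning per domain.

```python
import math
from typing import Sequence


def prior_confidence(token_logprobs: Sequence[float]) -> float:
    """Geometric-mean token probability of the model's no-context answer.

    `token_logprobs` are natural-log probabilities of the answer tokens,
    as returned by APIs that expose log probabilities (assumed available).
    """
    if not token_logprobs:
        raise ValueError("token_logprobs must not be empty")
    return math.exp(sum(token_logprobs) / len(token_logprobs))


def choose_prompt_style(confidence: float, high: float = 0.9, low: float = 0.5) -> str:
    """Map prior confidence to a prompt style (thresholds are hypothetical)."""
    if confidence >= high:
        return "loose"     # strong prior: give the model more autonomy
    if confidence < low:
        return "strict"    # weak prior: lean on the retrieved content
    return "standard"


# Example: answer tokens with probabilities 0.6, 0.5 and 0.7.
logprobs = [math.log(0.6), math.log(0.5), math.log(0.7)]
confidence = prior_confidence(logprobs)
print(round(confidence, 3), choose_prompt_style(confidence))
```

Keep in mind that a “strict” prompt also makes the model more compliant with wrong retrieved content, so this kind of routing only helps when the retrieval source for low-confidence domains is reliable.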

At the same time, we must continue to innovate prompt writing techniques. Through careful wording and constraints, we can find a balance between encouraging the model to absorb new knowledge and having it carefully screen out erroneous information, shaping a more intelligent and knowledgeable AI assistant.