The Transformer architecture, long the reigning champion of the AI world, has a serious new challenger. The contender? Test-Time Training, or TTT for short.

Introducing TTT: The RNN Layer Replacing Self-Attention

At its core, TTT is a new kind of RNN layer with highly expressive hidden states. Its claim to fame? It is designed to replace the self-attention mechanism that forms the backbone of Transformer models.

The architecture comes from researchers at Stanford, UC Berkeley, UC San Diego, and Meta, and it has quickly drawn attention across the AI community.

The Achilles’ Heel of Transformers and Mamba

Transformers have long been the strongest option for lengthy text sequences, but this prowess comes at a steep price. Self-attention compares every token with every other token, so its cost grows quadratically with sequence length and becomes very expensive at long contexts.
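To make the quadratic cost concrete, here is a minimal NumPy sketch of single-head causal self-attention. The function name, sizes, and random weights are illustrative assumptions, not code from the TTT project. The only point is that the score matrix holds one entry per pair of tokens, so doubling the sequence length quadruples it.

```python
import numpy as np

def causal_self_attention(x, w_q, w_k, w_v):
    """Single-head causal self-attention over a (T, d) sequence.

    Returns the (T, d) output and the shape of the score matrix.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    T, d = q.shape
    scores = (q @ k.T) / np.sqrt(d)                    # (T, T): the quadratic term
    future = np.triu(np.ones((T, T), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)         # a token cannot see later tokens
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, scores.shape

rng = np.random.default_rng(0)
d = 16  # illustrative feature size
w_q, w_k, w_v = (rng.normal(size=(d, d)) / np.sqrt(d) for _ in range(3))

for T in (128, 256, 512):
    out, score_shape = causal_self_attention(rng.normal(size=(T, d)), w_q, w_k, w_v)
    print(T, score_shape, score_shape[0] * score_shape[1])
```

Each doubling of `T` in the loop multiplies the number of score entries by four, while the output stays at `T` rows.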

Enter Mamba, which tackles this limitation with an RNN layer whose cost grows only linearly with sequence length. However, an RNN layer has to compress everything it has seen into a fixed-size hidden state, and that constrains its expressiveness when grappling with extensive text.

The Eureka Moment: Making Hidden States Learnable

Faced with this conundrum, the researchers behind TTT had a stroke of genius: What if we could imbue hidden states with the same learning capabilities as the model itself?

Thus, TTT was born: the hidden state of the RNN becomes a miniature machine learning model in its own right, and the update rule is one step of self-supervised learning on the incoming token. The context is compressed into the weights of that inner model.

This approach effectively trains the hidden state on the test sequence itself, hence the name “test-time training.” The result? A layer with linear complexity in sequence length and a far more expressive hidden state.
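The idea can be sketched in a few lines of NumPy. This is a toy illustration under stated assumptions, not the project's implementation. The hidden state is the weight matrix `W` of a linear model, the self-supervised task is to reconstruct one projection of the token from another, and the names `theta_k`, `theta_v`, `theta_q`, and `lr` are hypothetical parameters chosen for this example.

```python
import numpy as np

def ttt_linear_forward(xs, theta_k, theta_v, theta_q, lr=0.25):
    """Toy TTT-style layer over a (T, d) sequence.

    The hidden state is W, the weights of an inner linear model f(x) = W @ x.
    Each token triggers one gradient step on a self-supervised loss.
    """
    T, d = xs.shape
    W = np.zeros((d, d))               # hidden state: same size for any T
    outputs, losses = [], []
    for x in xs:
        train_view = theta_k @ x       # what the inner model sees
        target = theta_v @ x           # what it should reconstruct
        err = W @ train_view - target
        losses.append(float(err @ err))
        grad = 2.0 * np.outer(err, train_view)  # d/dW of ||W k - v||^2
        W = W - lr * grad              # update rule = one learning step
        outputs.append(W @ (theta_q @ x))       # output uses the updated state
    return np.stack(outputs), W, losses

# Demo: the same unit-norm token repeated five times, identity projections.
d = 4
eye = np.eye(d)
token = np.array([0.6, 0.8, 0.0, 0.0])
xs = np.tile(token, (5, 1))
outputs, W, losses = ttt_linear_forward(xs, eye, eye, eye)
print(losses)
```

In this demo the loss shrinks at every step as `W` learns the repeated token, while the size of `W` never changes. The work per token is constant, which is where the linear complexity comes from.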

Evaluating TTT: A Commanding Performance

To put TTT through its paces, the team proposed two variants: TTT-Linear and TTT-MLP, differing in their hidden state representations (linear models and multi-layer perceptrons, respectively).
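The difference between the two variants comes down to which inner model holds the hidden state. Below is a rough sketch of both. The two-layer shape, the 4x hidden width, the GELU activation, and all function names are assumptions made for illustration rather than details taken from the text above.

```python
import numpy as np

def linear_inner_model(params, x):
    """Inner model for a TTT-Linear-style state: a single weight matrix."""
    (W,) = params
    return W @ x

def gelu(z):
    """tanh approximation of the GELU activation."""
    return 0.5 * z * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (z + 0.044715 * z**3)))

def mlp_inner_model(params, x):
    """Inner model for a TTT-MLP-style state: two layers with a nonlinearity."""
    W1, W2 = params
    return W2 @ gelu(W1 @ x)

def state_size(params):
    """Number of scalars held in the hidden state."""
    return sum(p.size for p in params)

d = 64  # illustrative feature size
rng = np.random.default_rng(0)
linear_state = (rng.normal(size=(d, d)) * 0.02,)
mlp_state = (rng.normal(size=(4 * d, d)) * 0.02,
             rng.normal(size=(d, 4 * d)) * 0.02)

x = rng.normal(size=d)
print(linear_inner_model(linear_state, x).shape, state_size(linear_state))
print(mlp_inner_model(mlp_state, x).shape, state_size(mlp_state))
```

Under these assumptions the MLP state holds eight times as many numbers as the linear one. That makes it more expressive, but also means more data to read and write at every update.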

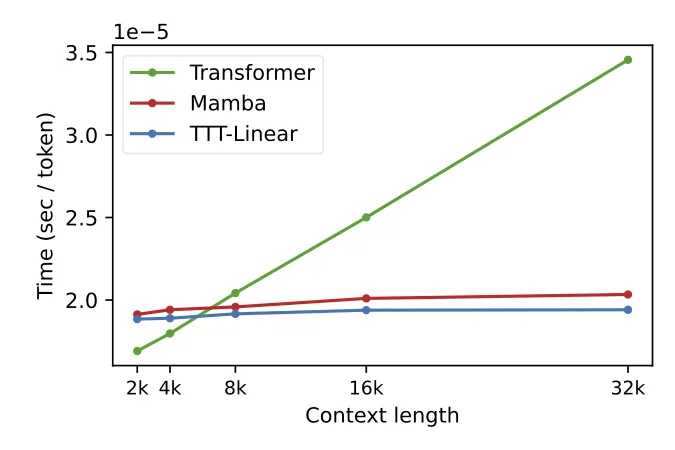

The team pitted these against Transformers and Mamba across a spectrum of model scales (125M to 1.3B parameters), and the results were strong. TTT-Linear matched or beat both rivals in perplexity while remaining computationally efficient, particularly in long-context scenarios.

TTT-MLP, while slightly lagging behind TTT-Linear in shorter contexts, truly came into its own as the sequence length grew, showcasing the superior expressiveness of MLPs over linear models.

Across the board, from the Pile to the Books subset, TTT performed well. Even when stacked up against fine-tuned Transformers, TTT held its ground and often came out ahead.

Optimizing TTT: Efficiency Gains and Future Directions

Not content to rest on their laurels, the researchers further optimized TTT’s hardware efficiency. Through techniques like mini-batch TTT and the dual form, TTT-Linear runs faster than the Transformer and matches Mamba at the 8k context length mark. As the context size increased, the gap to the Transformer only widened in TTT’s favor.
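One way to picture the batched update mentioned above (called mini-batch TTT in the paper) is sketched below: within a block of `b` tokens, every gradient is taken with respect to the weights at the start of the block, so the errors for the whole block come from a single matrix multiply instead of `b` sequential steps. This is a simplified illustration, and the function name, the precomputed `keys`, `values`, and `queries` arrays, and the squared-error loss are assumptions. It does not implement the dual form.

```python
import numpy as np

def ttt_minibatch(keys, values, queries, b, lr):
    """Toy mini-batch TTT with a linear inner model f(x) = W @ x.

    keys, values, queries: (T, d) arrays (training view, target, test view).
    b: mini-batch size. With b=1 this reduces to one gradient step per token.
    """
    T, d = keys.shape
    W = np.zeros((d, d))
    outputs = []
    for start in range(0, T, b):
        K = keys[start:start + b]
        V = values[start:start + b]
        Q = queries[start:start + b]
        err = K @ W.T - V                        # all errors against the same W
        # grads[s] = 2 * outer(err[s], K[s]): gradient of ||W k_s - v_s||^2
        grads = 2.0 * err[:, :, None] * K[:, None, :]
        # Token s uses W minus the gradients of tokens start..s in this block.
        W_per_token = W - lr * np.cumsum(grads, axis=0)
        outputs.append(np.einsum("bij,bj->bi", W_per_token, Q))
        W = W_per_token[-1]                      # carry the state to the next block
    return np.concatenate(outputs), W

rng = np.random.default_rng(0)
K, V, Q = (rng.normal(size=(8, 4)) for _ in range(3))
out_b4, W_b4 = ttt_minibatch(K, V, Q, b=4, lr=0.01)
print(out_b4.shape, W_b4.shape)
```

For clarity, this sketch materializes one weight matrix per token, which costs extra memory. Reducing that kind of overhead is the sort of problem the efficiency work targets.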

While TTT-Linear and TTT-MLP have already set a high bar, the team acknowledges that challenges remain, particularly in terms of memory I/O for TTT-MLP. Since TTT-MLP is also the variant that benefits most from long context, solving this could unlock considerable further potential.

The path forward is clear: further optimizing TTT’s memory utilization and parallel computing capabilities should bring more gains. TTT-Linear and TTT-MLP are an early but promising start, and these architectures look set to be an active area of AI research for years to come.

🔗 Project Link: https://github.com/test-time-training/ttt-lm-jax